Engineering Reliable Human-in-the-Loop Architectures in n8n

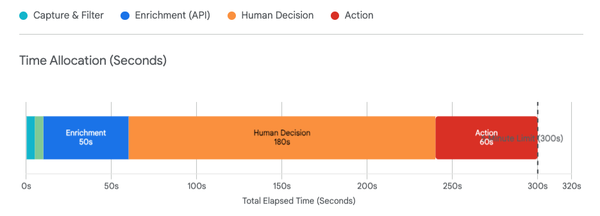

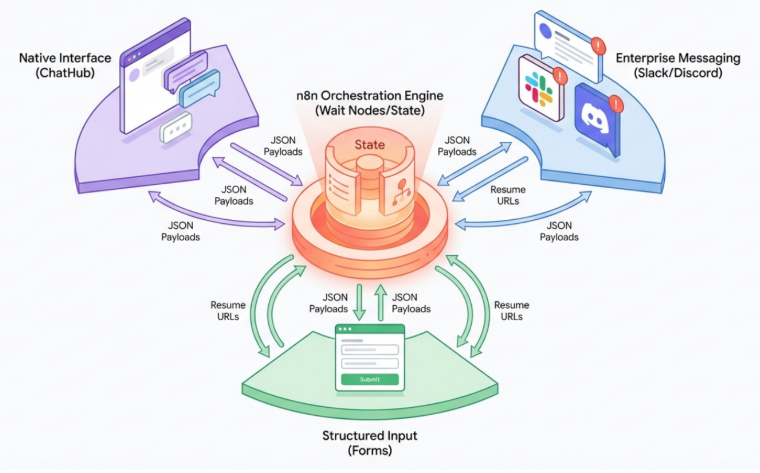

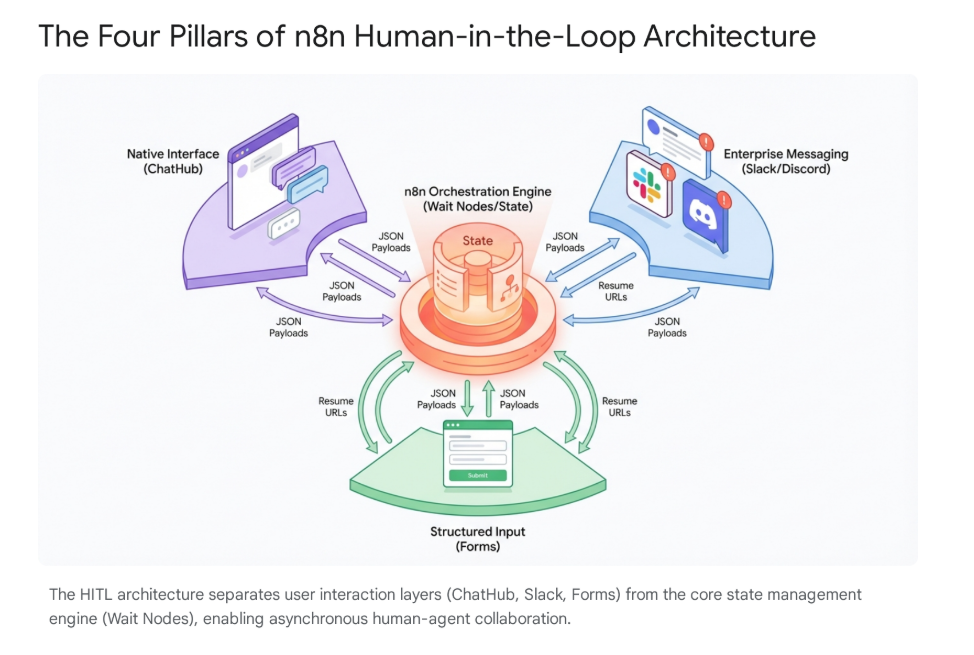

We often discuss Human-in-the-loop (HITL) merely as a safety guardrail—a "pause" button to prevent AI errors. However, from an engineering perspective, it represents a complex state management challenge that most standard automation stacks fail to handle gracefully.

Unlike a standard script that executes linearly in milliseconds, an agentic workflow is asynchronous and stateful. It might need to pause for days while a Vice President reviews a contract or compliance checks are run, all while the system must retain its memory, variable context, and security tokens without consuming active server resources.

Based on our recent deep dives into the n8n ecosystem (specifically v2.1), we have identified specific failure modes in standard deployments. Below is the architectural framework we are adopting to ensure these systems are robust, secure, and production-ready.

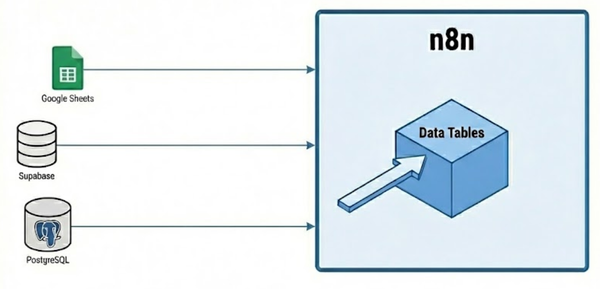

1. The Core Engine: Asynchronous State Persistence

The foundation of any interactive agent is the Wait Node, but its function is often misunderstood. When an agent pauses to ask for input, it cannot simply "sleep" in server memory. Keeping a process alive for days is volatile (data is lost on server restarts) and expensive (RAM consumption scales linearly with active workflows).

The Serialization Pattern:

A robust architecture must "serialize" the entire execution state. When the Wait Node is triggered, n8n snapshots the current execution context (every variable, every binary file, and the entire reasoning chain up to that point) and commits it to a persistent database (PostgreSQL or SQLite). The worker then unloads the execution from memory, freeing up compute resources for other workflows.

The Resume Mechanism:

The key to waking the process is the Resume URL, a cryptographically unique endpoint generated for that specific execution. This URL acts as the temporal bridge. When triggered, the system retrieves the frozen state from the database, deserializes it, and resumes execution exactly where it left off.

- Bi-Directional Data: Crucially, this is not just a binary trigger. We can inject a data payload into the Resume URL (via query parameters or a POST body). This allows for "Feedback Loops," where a human doesn't just click "Approve," but provides specific text feedback ("Rewrite paragraph 3") which is injected directly into the agent's context window upon resumption.

Architectural Risk: The "Localhost Trap"

A common failure mode in self-hosted environments involves misconfigured ingress variables (WEBHOOK_URL). If not strictly defined, the system generates internal Resume URLs (e.g., pointing to localhost) that are unreachable from the outside world.

- Mitigation: We must ensure strict Fully Qualified Domain Name (FQDN) configuration to guarantee that approval links generated by the agent are actionable by users on public networks (Email, Slack, mobile).
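One way to catch this before deployment is a small pre-flight check on the configured value. The sketch below is an assumption about what such a check might look like, not part of n8n: the function name `is_publicly_routable` is hypothetical, and the rules (reject bare hostnames, `localhost`, and non-global IP addresses) are deliberately conservative.

```python
import ipaddress
from urllib.parse import urlparse


def is_publicly_routable(webhook_url: str) -> bool:
    """Return True if the URL looks reachable from outside the host network."""
    parsed = urlparse(webhook_url)
    host = parsed.hostname
    if parsed.scheme not in ("http", "https") or not host:
        return False
    try:
        # Literal IP addresses: accept only globally routable ones.
        return ipaddress.ip_address(host).is_global
    except ValueError:
        pass  # Not an IP literal, so treat it as a hostname.
    # Bare names such as "n8n" or "localhost" are container or loopback names.
    return host != "localhost" and "." in host
```

Running this against the value of WEBHOOK_URL at startup (for example in a deployment script) would flag `http://localhost:5678/` or a Docker service name such as `http://n8n:5678/` before any approval link is sent.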

2. Native Interaction: The New ChatHub v2.1 Standard

For internal tools and "Co-Pilot" interfaces, we are moving away from custom React frontends. n8n's ChatHub has become the standard, unifying session management, file handling, and token streaming into a single component.

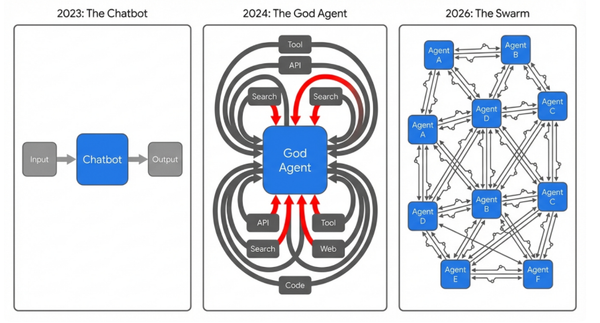

We are seeing a split in agent design patterns here:

- Personal Agents (Configuration-Centric): These are lightweight wrappers around models, similar to custom GPTs (although without attached vectorized knowledge). They are useful for simple Q&A but lack deep integration.

- Workflow Agents (Orchestration-Centric): This is the enterprise standard. Here, the chat window is merely an interface for a complex backend process. The "Chat Trigger" initiates a workflow that may perform SQL lookups, API actions, or vector searches before the AI model is ever invoked.

Critical Pattern: Solving "Session Amnesia"

A frequent bug in production is the "ghost execution." When a user refreshes their browser, the frontend fires a loadPreviousSession event. If the backend workflow treats this as a standard message, the agent may hallucinate a response or re-run expensive logic.

- The Fix: We must implement strict routing logic immediately after the trigger. A Switch node must distinguish between a sendMessage action (which triggers the AI reasoning loop) and a loadPreviousSession action (which simply queries the chat history database and returns it, bypassing the AI entirely).
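The routing rule can be sketched as a plain function. In n8n this logic would live in a Switch node rather than in Python; the payload field names (`action`, `chatInput`) and the response shapes below are assumptions made for illustration, and `run_agent` stands in for the AI reasoning loop.

```python
from typing import Callable


def route_chat_event(
    event: dict,
    history: list[dict],
    run_agent: Callable[[str], str],
) -> dict:
    """Dispatch a chat event without invoking the model on a page refresh."""
    action = event.get("action")
    if action == "loadPreviousSession":
        # Read-only path: return stored messages, never call the model.
        return {"data": list(history)}
    if action == "sendMessage":
        user_text = event.get("chatInput", "")
        reply = run_agent(user_text)
        history.append({"role": "user", "content": user_text})
        history.append({"role": "assistant", "content": reply})
        return {"output": reply}
    # Unknown actions are rejected rather than treated as messages.
    return {"error": f"unsupported action: {action!r}"}
```

The important design choice is the explicit fallthrough: anything that is not a recognized message is rejected instead of being handed to the agent by default.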

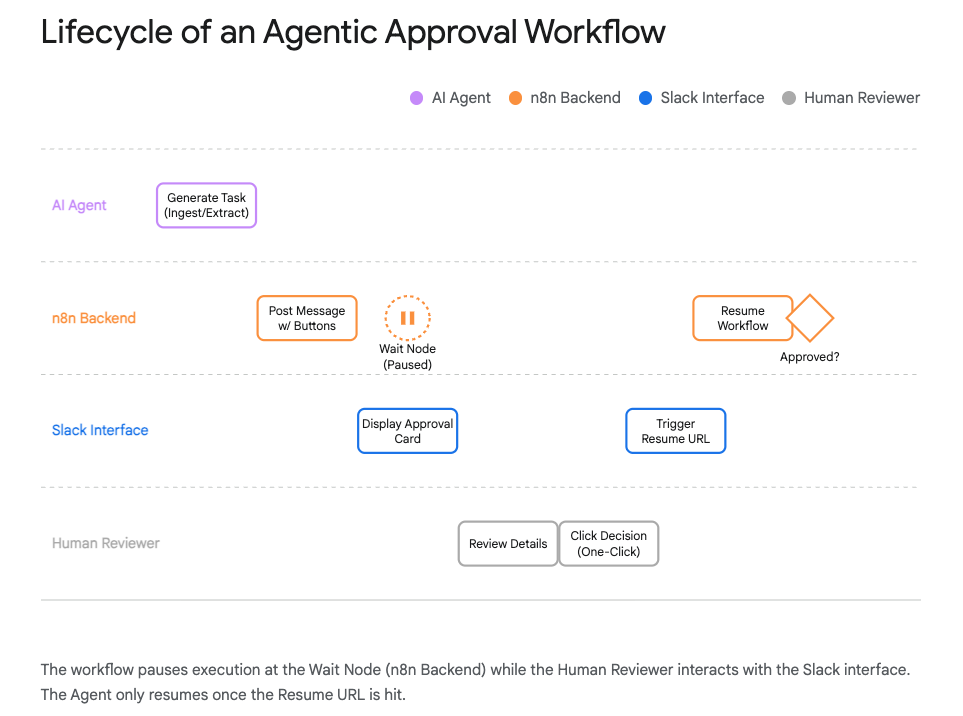

3. Headless Interaction: Managing Race Conditions in Slack/Discord/Teams

For most executives, the "interface" is simply the messaging platform they already use. However, integrating stateful agents into stateless platforms like Slack, Discord, or Teams introduces asynchronous complexity.

The "Dissolving Button":

Network latency often causes users to "double-click" approval buttons. In financial workflows (e.g., invoice payment), this race condition is catastrophic.

- The Pattern: We are implementing a "dissolving" UI pattern. The moment a workflow resumes, its first action is to call the platform API to update the original message. It removes the interactive buttons and replaces them with a static text block: "✅ Approved by [User] at [Time]".

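- Benefit: Once the buttons are gone, the user has nothing left to click a second time, and the chat history keeps a permanent, clean audit trail. Because a second click can still arrive before the message update lands, the workflow should also record each approval ID on the backend and ignore repeats; that server-side check is what actually makes the action idempotent.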
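A minimal sketch of the two halves of this pattern follows: a server-side claim that lets only the first click through, and the static message that replaces the buttons. The `ApprovalLedger` class and `handle_click` helper are hypothetical, the in-memory dictionary stands in for a real database, and the message payload is only loosely modelled on Slack-style blocks.

```python
import threading
from datetime import datetime, timezone


class ApprovalLedger:
    """In-memory stand-in for a table with a unique key on approval_id."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._claimed: dict[str, str] = {}

    def claim(self, approval_id: str, user: str) -> bool:
        """Return True only for the first click on a given approval."""
        with self._lock:
            if approval_id in self._claimed:
                return False
            self._claimed[approval_id] = user
            return True


def dissolved_message(user: str, when: datetime) -> dict:
    """Build the static replacement: text only, no interactive elements."""
    text = f"✅ Approved by {user} at {when:%Y-%m-%d %H:%M} UTC"
    return {"text": text, "blocks": [
        {"type": "section", "text": {"type": "mrkdwn", "text": text}},
    ]}


def handle_click(ledger, approval_id, user, pay_invoice, now=None):
    """Run the side effect once, then return the message update to send."""
    if not ledger.claim(approval_id, user):
        return None  # Duplicate click: do nothing.
    pay_invoice(approval_id)
    return dissolved_message(user, now or datetime.now(timezone.utc))
```

The returned dictionary is what the workflow would pass to the platform's message-update call, so the first action after resuming both settles the approval and removes the buttons.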

The "Deferred Response":

Platforms like Discord enforce strict 3-second timeouts on interactions. Real AI work—such as RAG lookups or document analysis—takes significantly longer. If we process synchronously, the interaction times out and fails.

- The Pattern: We must use a "Defer → Process → Edit" loop:

  - Defer (Ack): Immediately send a "Thinking..." signal (on Discord, an interaction response of type 5; elsewhere, an ephemeral message) to satisfy the platform timeout.

  - Process: The workflow continues the heavy logic in the background.

  - Edit: Once complete, the workflow uses the interaction token to programmatically edit the original placeholder message with the final result.
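The loop can be sketched for Discord as follows. The response type `5` and the "edit original response" webhook route follow Discord's interactions API as we understand it; the helper names, the `send` callback (which would perform the actual HTTP request), and the use of a thread for background work are assumptions for illustration.

```python
import threading
from typing import Callable

DISCORD_API = "https://discord.com/api/v10"


def deferred_ack() -> dict:
    """Body returned within the timeout: 'deferred channel message' (type 5)."""
    return {"type": 5}


def edit_original_request(application_id: str, token: str, content: str) -> dict:
    """Describe the PATCH that replaces the placeholder with the final result."""
    return {
        "method": "PATCH",
        "url": f"{DISCORD_API}/webhooks/{application_id}/{token}/messages/@original",
        "json": {"content": content},
    }


def handle_interaction(
    interaction: dict,
    do_heavy_work: Callable[[dict], str],
    send: Callable[[dict], None],
) -> tuple[dict, threading.Thread]:
    """Acknowledge immediately, then process and edit in the background."""

    def worker() -> None:
        result = do_heavy_work(interaction["data"])
        send(edit_original_request(
            interaction["application_id"], interaction["token"], result
        ))

    thread = threading.Thread(target=worker)
    thread.start()
    # The ack is returned right away, regardless of how long the worker runs.
    return deferred_ack(), thread
```

In an n8n workflow the same split is usually achieved by responding to the webhook early and letting the remaining nodes run, with a final HTTP Request node issuing the edit.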

4. Structured Interaction: Context Seeding with Forms

While chat is powerful, it is a poor interface for structured data entry. Asking an LLM to "interview" a user for 10 specific data points (Tax ID, Budget Codes, Dates) introduces a high risk of hallucination and extraction errors.

The "Context Seeding" Pattern:

We are shifting to a hybrid model. Instead of starting with an empty chat context, the workflow triggers a structured form (e.g., n8n Form Trigger).

- Mechanism: The user fills out validated fields. The workflow then takes this structured JSON output and injects it directly into the Agent’s System Prompt.

- Impact: This ensures the agent begins its reasoning chain with validated, structured data rather than values it had to extract from free text. It eliminates the "garbage in, garbage out" cycle common in conversational data extraction. This pattern is also effective for mid-workflow feedback: if an agent gets stuck, it can email a form link to the user, wait for the structured response, and then resume.
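As an illustration of the seeding step, the sketch below turns a form submission into a system prompt. The field names (`tax_id`, `budget_code`, `start_date`) and the prompt wording are hypothetical; in n8n the same result would typically come from an expression that embeds the form's JSON in the agent's system message.

```python
import json

# Hypothetical required fields for an intake form.
REQUIRED_FIELDS = ("tax_id", "budget_code", "start_date")


def build_system_prompt(form_data: dict) -> str:
    """Embed validated form fields in the system prompt as a JSON block."""
    missing = [name for name in REQUIRED_FIELDS if not form_data.get(name)]
    if missing:
        raise ValueError(f"form submission is missing: {', '.join(missing)}")
    # Only whitelisted fields are passed on; extra keys are dropped.
    seeded = {name: form_data[name] for name in REQUIRED_FIELDS}
    return (
        "You are a procurement assistant. Treat the JSON below as facts "
        "supplied by the user through a validated form. Do not ask for "
        "these values again and do not alter them.\n\n"
        + json.dumps(seeded, indent=2, sort_keys=True)
    )
```

Failing loudly on a missing field keeps the agent from starting with a partially filled context and guessing the rest.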

5. Complex Orchestration: Fan-Out/Fan-In

For multi-stakeholder approvals (e.g., Legal + Finance + Compliance), a linear wait (A then B then C) is too slow. However, standard "parallel" execution fails because the main workflow cannot easily wait for all branches to finish before proceeding.

The "Collector" Pattern:

We are employing a decoupled Fan-Out/Fan-In architecture:

- Fan-Out: The main workflow triggers three parallel sub-workflows (one for each department) and then terminates. It does not wait.

- Execution: Each sub-workflow handles its specific approval logic independently.

- Fan-In (The Collector): Upon completion, each sub-workflow writes its status to a shared state store (e.g., Redis or a dedicated SQL table) tagged with a BatchID.

- Trigger: A final "Check" process runs after each write. If Completed_Count == Total_Required, it triggers the finalization workflow.
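The collector's write-then-check step can be sketched with a dedicated SQL table. This is a minimal illustration using SQLite from the standard library; the table name `approvals`, its columns, and the function names are assumptions, and a production version would run against the shared store (PostgreSQL or Redis) that all sub-workflows can reach.

```python
import sqlite3


def open_collector(path: str = ":memory:") -> sqlite3.Connection:
    """Create the shared state table, keyed so each department counts once."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS approvals ("
        "batch_id TEXT, department TEXT, status TEXT, "
        "PRIMARY KEY (batch_id, department))"
    )
    return conn


def record_and_check(
    conn: sqlite3.Connection,
    batch_id: str,
    department: str,
    status: str,
    total_required: int,
) -> bool:
    """Write one result; return True only for the write that completes the batch."""
    with conn:  # One transaction covers the write and the count.
        cursor = conn.execute(
            "INSERT OR IGNORE INTO approvals VALUES (?, ?, ?)",
            (batch_id, department, status),
        )
        if cursor.rowcount == 0:
            return False  # Duplicate report from the same department.
        (completed,) = conn.execute(
            "SELECT COUNT(*) FROM approvals WHERE batch_id = ?", (batch_id,)
        ).fetchone()
    return completed == total_required
```

Each sub-workflow would call `record_and_check` as its last step and start the finalization workflow only when it returns `True`, so exactly one of the three branches fires the final step.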

Summary

The difference between a demo and a production system is the "connective tissue" that manages the wait. By standardizing these patterns—state serialization, dissolving buttons, context seeding, and collector orchestration—we can deploy agents that are not just smart, but reliable components of the enterprise stack.

Is your team running HITL processes in n8n yet? I'd love to hear how you're handling it.

Troy