Moving Beyond Expensive Lead Enrichment SaaS Subscriptions with Gemini

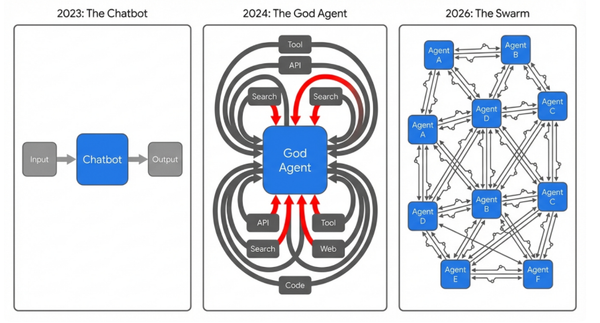

The enterprise technology landscape is currently navigating a fundamental phase transition, moving from a paradigm of software consumption to one of cognitive orchestration. For the better part of the last decade, sales development and data enrichment relied heavily on third-party SaaS aggregators—platforms such as Clay, ZoomInfo, or Clearbit.

These platforms served a vital, albeit expensive, purpose: they functioned as intermediaries, bridging the gap between the raw, unstructured chaos of the open internet and the structured, relational databases required by modern revenue teams. They monetized what was effectively an "API gap," charging significant premiums to bundle data retrieval, normalization, and elementary logic into user-friendly interfaces. Organizations "rented" this intelligence, paying per-record fees that scaled linearly with growth. That pricing often trapped them in a cycle of diminishing returns, in which customer acquisition cost (CAC) inflated alongside the volume of outreach.

However, the maturation of the Google AI ecosystem in late 2025—specifically the deep interoperability between Google Sheets, Google Workspace Studio, and the Gemini API—has effectively collapsed this gap. The technological capability to "read a spreadsheet row, search the live web for context, reason about a prospect's fit against an Ideal Customer Profile (ICP), and write a decision back to the database" is no longer a specialized feature exclusive to niche SaaS providers. It has become a commodity capability inherent to the underlying cloud infrastructure.

This democratization of reasoning power signals the end of the "rentier" model of data enrichment. Organizations can now architect their own intelligence systems using the raw materials of cloud compute, shifting from "renting" intelligence to "owning" the reasoning engine.

Here is the architectural blueprint for replicating—and exceeding—the capabilities of a "Clay-style" enrichment waterfall using Google’s native stack.

The Economic Case: Raw Materials vs. Credits

To fully appreciate the strategic value of building this system, one must first deconstruct the economic mechanics of the incumbent model. SaaS platforms effectively function as "logic wrappers." Their primary value proposition is the reduction of friction; they charge a premium for the convenience of chaining disparate API calls (e.g., "Find LinkedIn URL" -> "Scrape Profile" -> "Ask GPT-4") into a coherent interface.
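The chaining these platforms sell is usually called a waterfall: query sources in a fixed order and stop at the first one that returns a value. A minimal Python sketch of that control flow follows; the two source functions are hypothetical placeholders that read from the lead record, not real vendor integrations.

```python
from typing import Callable, Optional, Sequence, Tuple

# A source takes a lead record and returns a value, or None when it finds nothing.
Source = Callable[[dict], Optional[str]]


def waterfall(lead: dict, sources: Sequence[Tuple[str, Source]]) -> Tuple[Optional[str], Optional[str]]:
    """Try each (name, source) in order; return (value, name) for the first hit."""
    for name, source in sources:
        value = source(lead)
        if value:  # None and "" both count as a miss, so fall through to the next source
            return value, name
    return None, None  # every source missed


# Hypothetical sources for illustration; real ones would call an API or a scraper.
def from_crm(lead: dict) -> Optional[str]:
    return lead.get("crm_title")


def from_web_search(lead: dict) -> Optional[str]:
    return lead.get("search_title")


lead = {"name": "Ada", "company": "Acme", "search_title": "VP Sales"}
print(waterfall(lead, [("crm", from_crm), ("web_search", from_web_search)]))
# prints ('VP Sales', 'web_search')
```

On a credit-based platform, each step in this sequence can consume credits for the same row, which is how the per-row cost accumulates.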

The cost structure of these platforms is typically "Credit-Based," a model designed to abstract the underlying cost of compute. A user pays for a subscription tier that grants an allowance of credits. Complex actions often consume multiple credits per row, obscuring the massive markup being applied to the raw cost of intelligence.

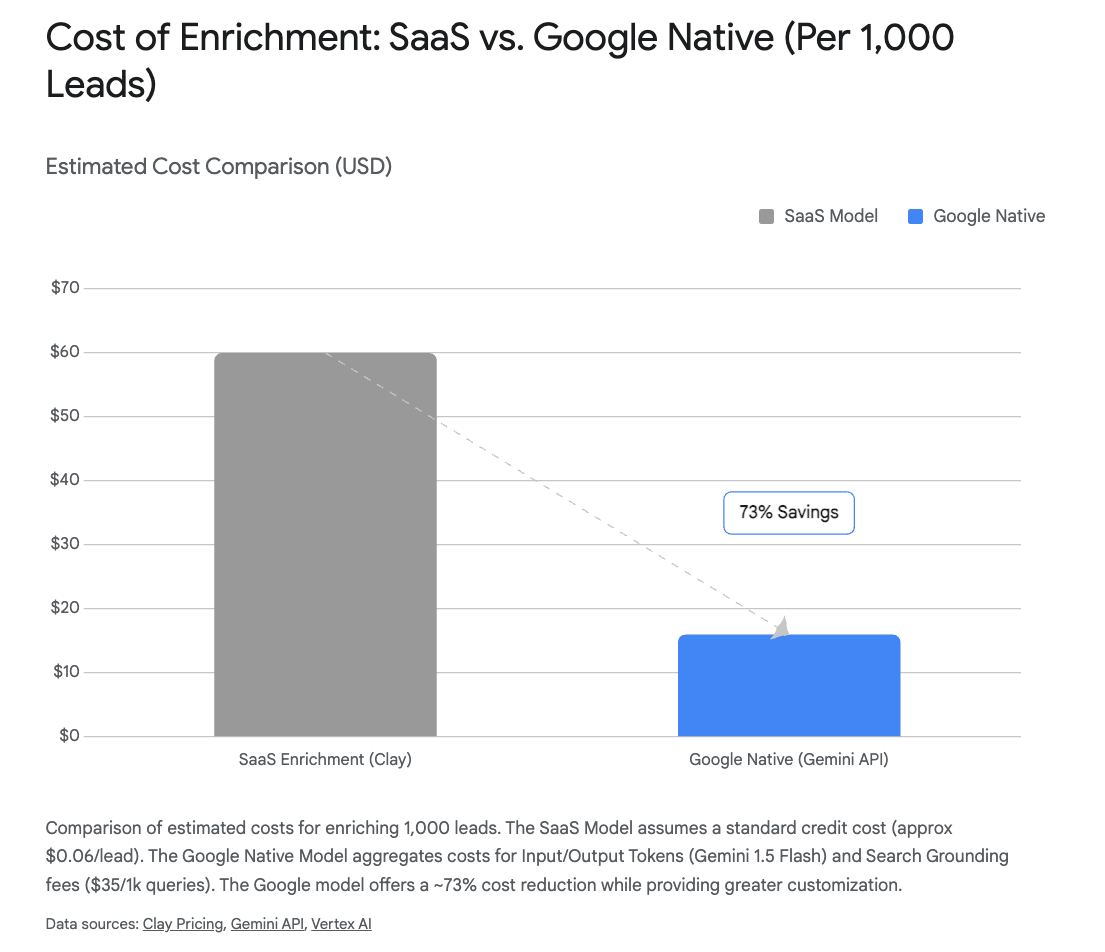

- SaaS Costs (The Markup): Estimates put comprehensive enrichment via platforms like Clay at $0.02 to over $0.10 per enriched row, depending heavily on the complexity of the waterfall. As organizations scale from thousands to tens of thousands of leads, this markup compounds into a punishing cost curve.

- Direct API Costs (The Arbitrage): In the Google ecosystem, the enterprise pays for the raw materials of intelligence: input/output tokens. By owning the reasoning engine directly via the Gemini API, token costs drop to approximately $0.001–$0.005 per lead, before search grounding. You are essentially accessing the intelligence at wholesale prices, eliminating the middleman markup.

- The Primary Cost Driver: The main expense in the Google stack is "Grounding with Google Search" ($35 per 1,000 queries, or about $0.035 per lead, assuming one grounded query each). Although it is the most expensive component, it is critical for retrieving live data, such as a company's current pricing page or a prospect's LinkedIn headline, rather than relying on stale training data. Even with this fee, a total of roughly $0.04 per grounded lead sits at the low end of the SaaS range above and well below the cost of complex credit-based waterfalls.
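The per-lead arithmetic can be made explicit with a small Python helper. The token prices used below ($0.30 and $2.50 per million input and output tokens) are illustrative assumptions, not quoted rates, so substitute the current price of whichever model you use. The $35 per 1,000 grounding figure comes from the text. Treating the batch discount as applying to token costs only is also an assumption.

```python
def cost_per_lead(
    input_tokens: int,
    output_tokens: int,
    usd_per_m_input: float,
    usd_per_m_output: float,
    grounded: bool = True,
    usd_per_1k_grounding: float = 35.0,
    batch: bool = False,
) -> float:
    """Estimate the direct API cost in USD of enriching one lead.

    Assumes at most one grounded search per lead and, when batch=True, a 50%
    discount applied to token costs only (an assumption, not a quoted rule).
    """
    token_cost = (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000
    if batch:
        token_cost *= 0.5
    grounding_cost = usd_per_1k_grounding / 1_000 if grounded else 0.0
    return token_cost + grounding_cost


# Illustrative prices only: $0.30 per 1M input tokens, $2.50 per 1M output tokens.
tokens_only = cost_per_lead(2_000, 300, 0.30, 2.50, grounded=False)  # about $0.00135
with_search = cost_per_lead(2_000, 300, 0.30, 2.50)                  # about $0.03635
print(f"tokens only: ${tokens_only:.5f}, with grounding: ${with_search:.5f}")
```

With these assumed prices, the search fee is roughly 25 times the token cost. That is why grounding dominates the bill, and why skipping it for rows that do not need live data saves the most.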

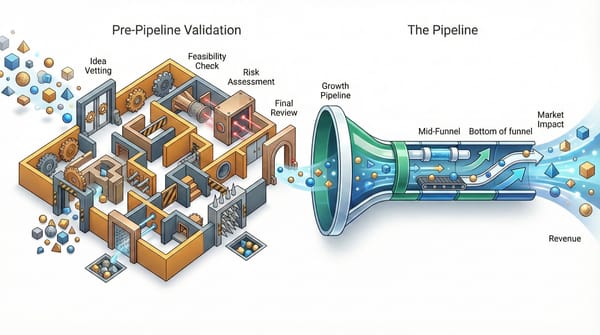

The Architecture: Two Distinct Streams

A common misconception among business users is that a single tool—like the "Gemini in Sheets" side panel—can handle all use cases. A robust "Sovereign Solution" splits workloads into two distinct streams to optimize for both cost and latency.

1. The Bulk Stream (High Volume, Low Cost)

The user’s primary requirement is often the "Bulk Case": the ability to evaluate every lead in a Google Sheet, one by one, to determine ICP fit.

- The Problem with Consumer Tools: Do not use the native "Gemini in Sheets" feature for this high-volume task. It is designed for synchronous assistance (manual prompting), not asynchronous automation. It lacks the "agentic loop" required to iterate through 5,000 rows autonomously, and it is subject to strict, often opaque rate limits that can quickly throttle bulk jobs.

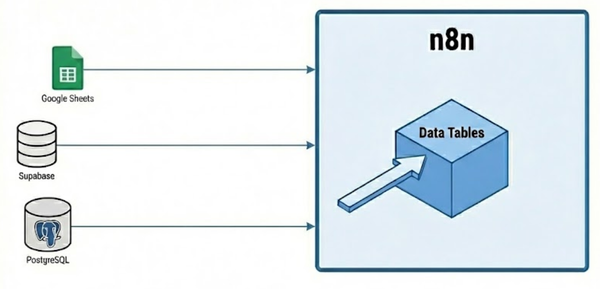

- The Solution: The robust architecture decouples the interface from the intelligence. Google Sheets serves merely as the database. The "Brain" is the Gemini API, and the "Hand" that moves data is Google Apps Script.

- Method: The script acts as the orchestrator. It iterates through the rows, constructing a prompt that explicitly requests a Google Search (e.g., "Find the current job title of [Name] at [Company] and determine if they match our ICP of 'B2B SaaS with >$10M revenue'"). The API uses the Google Search tool to validate data against live web information, and the script writes the structured decision back to the sheet.

- Optimization (Batch Mode): For large lead lists, use Gemini API Batch Mode. It lets you submit large volumes of prompts (e.g., 50,000 records) in a single file for Google to process asynchronously. Batch Mode offers a 50% discount compared with standard requests in exchange for non-immediate turnaround. The marginal cost of analyzing each additional 1,000 leads drops sharply, although it still scales with volume rather than staying fixed.
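The per-row loop that Apps Script runs can be sketched in Python to show the moving parts. Each row becomes a prompt, which is wrapped in a generateContent request body with the Google Search tool enabled. A parser extracts the decision from the response, and the same requests can be serialized as the JSONL lines Batch Mode accepts. The model name, the `DECISION:` line convention, and the row-number keys are assumptions for illustration. Whether a given model supports Google Search inside Batch Mode should be checked against current documentation. In Apps Script, the synchronous body would be POSTed to the endpoint with `UrlFetchApp.fetch` and an `x-goog-api-key` header.

```python
import json
from typing import Iterable, Tuple

# Assumed model name; substitute whichever Gemini model you actually use.
MODEL = "gemini-2.5-flash"
# Endpoint the synchronous (one row at a time) body would be POSTed to.
ENDPOINT = f"https://generativelanguage.googleapis.com/v1beta/models/{MODEL}:generateContent"
ICP = "B2B SaaS with >$10M revenue"
VERDICTS = {"YES", "NO", "MAYBE"}


def build_prompt(name: str, company: str, icp: str = ICP) -> str:
    """Ask for a search-backed answer that ends in one parseable decision line."""
    return (
        f"Use Google Search to find the current job title of {name} at {company}, "
        f"then decide whether {company} matches our ICP of '{icp}'. "
        "End with exactly one line: DECISION: YES, DECISION: NO, or DECISION: MAYBE."
    )


def build_request_body(prompt: str) -> dict:
    """generateContent request body with the Google Search grounding tool enabled."""
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "tools": [{"google_search": {}}],
    }


def parse_decision(response: dict) -> str:
    """Return YES/NO/MAYBE from a generateContent response; default to MAYBE."""
    candidate = (response.get("candidates") or [{}])[0]
    parts = candidate.get("content", {}).get("parts", [])
    text = "".join(part.get("text", "") for part in parts)
    # Scan from the bottom, since the prompt asks for the decision as the last line.
    for line in reversed(text.strip().splitlines()):
        if line.strip().upper().startswith("DECISION:"):
            verdict = line.split(":", 1)[1].strip().rstrip(".").upper()
            if verdict in VERDICTS:
                return verdict
    return "MAYBE"


def to_batch_jsonl(rows: Iterable[Tuple[str, str]], first_row: int = 2) -> str:
    """Serialize (name, company) rows as Batch Mode JSONL, keyed by sheet row number.

    first_row=2 assumes a header in row 1, so each key maps back to its sheet row.
    """
    lines = []
    for row_number, (name, company) in enumerate(rows, start=first_row):
        body = build_request_body(build_prompt(name, company))
        lines.append(json.dumps({"key": f"row-{row_number}", "request": body}))
    return "\n".join(lines)
```

Keying each batch line by sheet row is a design choice: when results come back, the key should let the script write each parsed decision to the matching row without relying on output order.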

2. The Real-Time Stream (Low Latency)

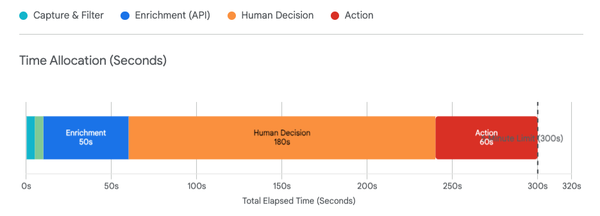

The second requirement is handling leads "as they arrive." This describes an event-driven architecture where the goal is near-zero latency: a lead fills out a form, and within seconds, they are analyzed, scored, and a draft response is prepared. The "Bulk" approach is too slow here.

- The Solution: Use Google Workspace Studio (formerly Workspace Flows) for team automation. It is designed for "Trigger-Action" workflows, allowing business users to build agents using natural language without writing extensive code.

- Trigger Mechanism: Native "New Row" triggers in Sheets can be unreliable or suffer from polling latency. Instead, use Google Forms triggers to start the flow as soon as a response is submitted. This provides a structured payload and enables near-immediate engagement.

- Logic (Probabilistic vs. Deterministic): Implement "Decide" nodes that utilize probabilistic logic. Traditional automation relies on brittle keyword matching (e.g., IF industry == "SaaS"). Workspace Studio nodes use the LLM to make semantic judgment calls (e.g., "Analyze the input data. Does this company's business model rely on recurring revenue? If ambiguous, assume 'Maybe'."). This allows the system to handle nuance and unstructured data far more effectively than rigid logic gates.
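Workspace Studio configures Decide nodes without code, but the contrast between the two kinds of logic can be sketched in Python. `keyword_gate` is the brittle deterministic check. `semantic_decide` sends the Decide prompt from the text to a model and maps anything other than a clear Yes or No to Maybe. `ask_llm` is a hypothetical callable standing in for the model call, so the stubbed replies below are not real model output.

```python
from typing import Callable

# Deterministic gate: the brittle keyword match described above.
def keyword_gate(lead: dict) -> bool:
    return lead.get("industry", "").strip().lower() == "saas"


DECIDE_PROMPT = (
    "Analyze the input data. Does this company's business model rely on recurring revenue? "
    "If ambiguous, assume 'Maybe'. Answer with one word: Yes, No, or Maybe.\n\nInput: {data}"
)


def semantic_decide(lead: dict, ask_llm: Callable[[str], str]) -> str:
    """Tri-state decision; any reply other than a clear Yes or No becomes 'Maybe'.

    ask_llm is a hypothetical callable (prompt -> reply text) standing in for
    whatever model call the workflow makes.
    """
    reply = ask_llm(DECIDE_PROMPT.format(data=lead)).strip().strip(".").capitalize()
    return reply if reply in {"Yes", "No"} else "Maybe"


lead = {"industry": "Software (subscription)", "notes": "Sells annual plans to HR teams"}
print(keyword_gate(lead))                       # False: the label is not literally "SaaS"
print(semantic_decide(lead, lambda p: "Yes."))  # stubbed model reply, normalized to "Yes"
```

Defaulting to "Maybe" keeps ambiguous leads in a review lane instead of silently discarding them, which mirrors the instruction inside the prompt itself.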

Strategic Implications

Adopting this architecture allows the enterprise to pay for the raw materials of intelligence (tokens and search queries) rather than a subscription tier. But the shift goes beyond simple cost savings; it represents a gain in strategic agility.

In the "rentier" model, you are constrained by the feature roadmap of your vendor. If you want to change your enrichment logic, you often have to wait for a platform update or pay for a higher tier. In the "sovereign" model, you own the prompt. If you need to redefine your ICP logic, search a new data source, or change the output format, you simply update the system prompt in your script.
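One way to keep the ICP editable is to hold it as data and render it into the request's system instruction, so a change in targeting is a config edit rather than a vendor feature request. The `ICP_CONFIG` fields and the prompt wording below are hypothetical; `system_instruction` and the `google_search` tool are fields of the Gemini API generateContent request body.

```python
# Hypothetical ICP definition; editing this dict is the whole "roadmap change".
ICP_CONFIG = {
    "segment": "B2B SaaS",
    "min_revenue_usd": 10_000_000,
    "output_format": "DECISION: YES | NO | MAYBE",
}


def render_system_prompt(icp: dict) -> str:
    """Turn the ICP config into the system instruction text."""
    return (
        f"You qualify sales leads. Our ICP is {icp['segment']} companies with revenue above "
        f"${icp['min_revenue_usd']:,}. Finish every answer with one line in the form: "
        f"{icp['output_format']}."
    )


def build_body(user_prompt: str, icp: dict) -> dict:
    """generateContent request body carrying the ICP as a system instruction."""
    return {
        "system_instruction": {"parts": [{"text": render_system_prompt(icp)}]},
        "contents": [{"parts": [{"text": user_prompt}]}],
        "tools": [{"google_search": {}}],
    }


# Retargeting to a new segment touches only the config, not the pipeline code.
fintech_body = build_body("Qualify Ada Lovelace at Acme.", dict(ICP_CONFIG, segment="Fintech"))
```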

This moves the organization from being a passive consumer of software to an active architect of its own Go-to-Market infrastructure. This is the state of the art for Google Cloud-based automation, enabling the "100x Operator" to manage vast pipelines with minimal overhead and maximum sovereignty.

To your future lower costs,

Troy