Precision signals are the path forward

Outbound isn't just getting harder; its fundamental physics has changed. The asset class we have relied on for two decades, static contact data, is collapsing under the weight of entropy.

For the last ten years, we operated on the "List Buying" paradigm. You purchased a static database of 10,000 contacts from a major provider, loaded them into a sequencer, and hit send. You trusted that the data was a "source of truth."

New data shows why treating a static list as a source of truth is now a mathematical impossibility. The "Rot Rate," the speed at which accurate data decays into unusable waste, has accelerated sharply due to structural workforce volatility and the permanent shift to distributed teams.

- Baseline Decay: Average B2B data now decays at ~22.5% annually.

- High-Velocity Sectors: In the tech and SaaS sectors, annual decay rates now reach upwards of 70.3%.

If you are selling into tech, your static list has a half-life of roughly seven months. Beyond that point, you aren't just battling low reply rates; you are battling entropy. You are paying expensive SDRs to chase "ghosts": decision-makers who have churned, companies that have frozen budgets, and emails sent to unverifiable "catch-all" domains.
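The half-life follows directly from the annual decay rates above. The sketch below assumes decay compounds exponentially (a constant share of the remaining records goes stale each month); the source does not state its model, and a linear model would give a longer tech half-life of about 8.5 months.

```python
import math

def half_life_months(annual_decay: float) -> float:
    """Months until half of a static list is stale, assuming exponential decay.

    annual_decay is the fraction of records that go stale in one year
    (e.g. 0.703 for 70.3%), so the surviving fraction after t years is
    (1 - annual_decay) ** t. Solving (1 - annual_decay) ** t = 0.5 for t
    gives the half-life in years.
    """
    survival = 1.0 - annual_decay
    years = math.log(0.5) / math.log(survival)
    return years * 12

for label, rate in [("Baseline B2B", 0.225), ("Tech/SaaS", 0.703)]:
    print(f"{label}: {half_life_months(rate):.1f} months")
```

Under this assumption the baseline list keeps half its accuracy for about 32.6 months, while a tech list loses half in about 6.9 months.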

The "Intent Illusion"

It gets worse. To compensate for bad data, many teams turned to "Intent Data": IP-based signals showing that someone at a company is visiting your site. But in a distributed world, this has become the "Intent Illusion." With decision-makers browsing from residential IPs or mobile networks, legacy providers can no longer reliably resolve that traffic to corporate entities. You end up swarming an account because an intern read a blog post, while missing the VP of Engineering who is researching your competitor from a coffee shop.
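To see why resolution breaks, here is a minimal sketch of reverse-IP matching, assuming a provider keeps a table of known corporate network ranges. The table, company names, and visitor IPs are hypothetical; the ranges are reserved documentation blocks (RFC 5737), with 192.0.2.17 standing in for a coffee-shop or home connection.

```python
import ipaddress
from typing import Optional

# Hypothetical reverse-IP table: corporate network ranges mapped to the
# company that owns them. Real providers maintain far larger tables.
CORPORATE_RANGES = {
    ipaddress.ip_network("198.51.100.0/24"): "ExampleCorp",
    ipaddress.ip_network("203.0.113.0/24"): "Acme Widgets",
}

def resolve_company(visitor_ip: str) -> Optional[str]:
    """Return the company owning visitor_ip, or None if no known range matches."""
    addr = ipaddress.ip_address(visitor_ip)
    for network, company in CORPORATE_RANGES.items():
        if addr in network:
            return company
    return None  # residential, mobile, or public Wi-Fi traffic lands here

visits = {
    "intern on office Wi-Fi": "198.51.100.42",
    "VP at a coffee shop": "192.0.2.17",
}
for who, ip in visits.items():
    print(who, "->", resolve_company(ip))
```

The intern resolves to ExampleCorp and triggers an "intent" alert; the VP resolves to None and is invisible, which is the illusion described above.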

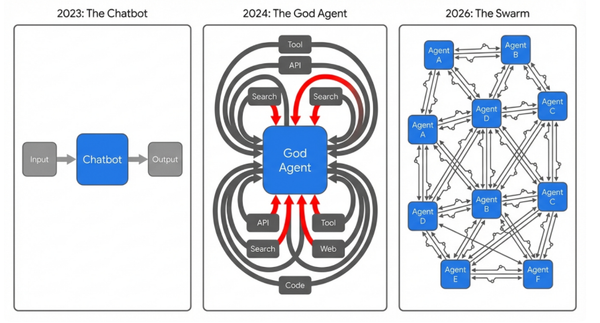

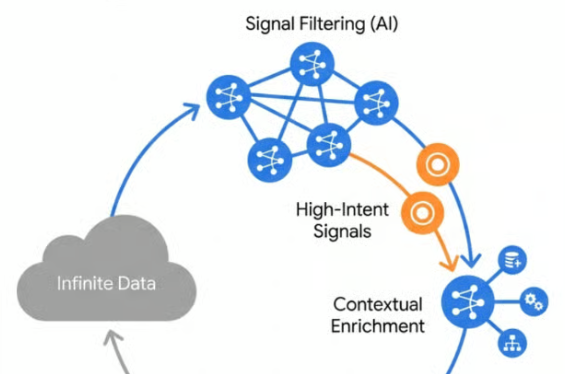

The shift for 2026 is not about buying better lists. It is about moving to "Just-in-Time" Intelligence. We are replacing the retrieval task (downloading a row from a database) with a computational task (an AI agent actively researching a target in real-time).

Here is the new operational playbook.

1. The "Nano-Trigger" vs. The "Macro-Trigger"

Most teams still rely on "Macro-Triggers" such as funding announcements or IPOs. The problem? These are highly commoditized. When a Series B is announced, the founder's inbox is instantly flooded with hundreds of automated pitches. The "Time-to-Context" is too long, and the noise is too high.

The competitive advantage lies in "Nano-Triggers." These are subtle, transient indicators of immediate pain that don't make the news.

- The Signal: A VP of Engineering comments on a niche LinkedIn post about struggling with "cloud cost optimization." Or a developer opens an issue on a competitor’s GitHub repository complaining about API latency.

- The Implication: This is "Pre-Intent." They are problem-aware but haven't visited a review site like G2 yet. They are in the "Dark Funnel."

- The Tech: Tools like Trigify or Octolens can now monitor specific keywords in comments (not just posts). You can set up "Boolean Pain Searches" (e.g., "alternatives to <competitor>" OR "struggling with <process>") to intercept these buyers before they ever fill out a form.
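A "Boolean Pain Search" is essentially an OR over pain phrases. The sketch below compiles one into a single case-insensitive regex and runs it over a few sample comments. The helper name, the competitor "ExampleCRM", and the comments are hypothetical; this is not how Trigify or Octolens implement matching, just the underlying idea.

```python
import re

def compile_pain_search(competitors, processes):
    """Build one case-insensitive regex equivalent to a Boolean OR of pain phrases."""
    phrases = [f"alternatives to {c}" for c in competitors]
    phrases += [f"struggling with {p}" for p in processes]
    # re.escape keeps names such as "C++" literal; "|" expresses the OR.
    body = "|".join(re.escape(p) for p in phrases)
    # Lookarounds instead of \b so phrases ending in punctuation still match.
    return re.compile(rf"(?<!\w)(?:{body})(?!\w)", re.IGNORECASE)

query = compile_pain_search(["ExampleCRM"], ["cloud cost optimization"])

comments = [
    "Anyone know good alternatives to ExampleCRM? Latency is killing us.",
    "Great post, congrats on the launch!",
    "We're Struggling With cloud cost optimization this quarter.",
]
hits = [c for c in comments if query.search(c)]
for c in hits:
    print(c)
```

Only the first and third comments match; the congratulatory comment is filtered out as noise.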

2. The "Active 5%" Protocol (Solving the Scale Problem)

A common question we get: "How can I monitor my Top 2,000 accounts for these signals without getting banned by LinkedIn?"

Mathematically, you cannot scrape 2,000 profiles daily. The safe operational limit for a LinkedIn account is roughly 80-100 visits per day. If you try to monitor everyone, you will burn your accounts.
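The arithmetic behind that claim, using the post's own 80-100 visits-per-day estimate (an operational rule of thumb, not a limit LinkedIn publishes):

```python
import math

def days_per_full_pass(profiles: int, daily_visit_limit: int) -> int:
    """Days a single account needs to view every profile once at a daily cap."""
    return math.ceil(profiles / daily_visit_limit)

for limit in (80, 100):
    print(f"{limit} visits/day -> {days_per_full_pass(2000, limit)} days per pass")
```

One account needs 20 to 25 days to cycle through 2,000 profiles once, so checking all of them daily would take 20 to 25 separate accounts.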

The solution is the Active 5% Protocol.

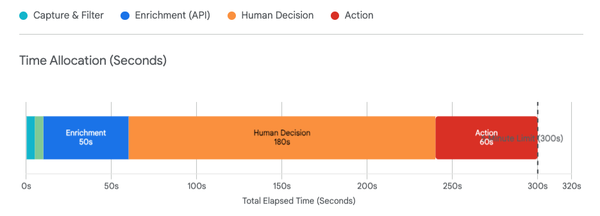

- The Audit (The Filter): Run a one-time audit on your 2,000 targets using a tool like Clay. You will likely find that only ~5% (100 people) have posted in the last 30 days. The vast majority of buyers are lurkers.

- Tier 1 (High-Velocity): Move those 100 "Talkers" into a daily monitoring pipeline (using Clay or PhantomBuster). You will catch nearly everything they post, so you can comment or DM the same day it goes up.

- Tier 2 (The Passive Watchlist): Move the remaining 1,900 "Sleepers" to passive alerts (like LinkedIn Sales Navigator Lists). Do not waste expensive scraping resources on people who never post.

This split improves the signal-to-noise ratio: you catch Tier 1 triggers as they happen while keeping daily profile visits within the safe limit.
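The audit-and-split step can be sketched as a simple filter on each prospect's last post date. The `Prospect` record, the 100-visit budget constant, and the 2,000-person list are assumptions for illustration (the list is synthetic, with every 20th person marked as a recent poster to mirror the ~5% figure); in practice the last-post dates would come from the one-time audit.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Tuple

DAILY_VISIT_LIMIT = 100  # upper end of the post's 80-100 safe estimate

@dataclass
class Prospect:
    name: str
    last_post: Optional[date]  # None if they have not posted

def split_tiers(
    prospects: List[Prospect], today: date, window_days: int = 30
) -> Tuple[List[Prospect], List[Prospect]]:
    """Tier 1: posted within the window (daily monitoring). Tier 2: everyone else."""
    cutoff = today - timedelta(days=window_days)
    tier1: List[Prospect] = []
    tier2: List[Prospect] = []
    for p in prospects:
        recent = p.last_post is not None and p.last_post >= cutoff
        (tier1 if recent else tier2).append(p)
    return tier1, tier2

# Synthetic audit result: every 20th prospect posted 3 days ago.
today = date(2026, 1, 15)
prospects = [
    Prospect(f"prospect-{i}", today - timedelta(days=3) if i % 20 == 0 else None)
    for i in range(2000)
]
tier1, tier2 = split_tiers(prospects, today)
print(len(tier1), len(tier2))
print("Tier 1 fits daily budget:", len(tier1) <= DAILY_VISIT_LIMIT)
```

This prints 100 Tier 1 "Talkers" and 1,900 Tier 2 "Sleepers". Re-running the audit periodically and re-checking the budget line is what keeps Tier 1 from growing past a single account's daily visits.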

3. The Agentic Future: From "List" to "Loop"

We are moving from static lists to Agentic Loops.

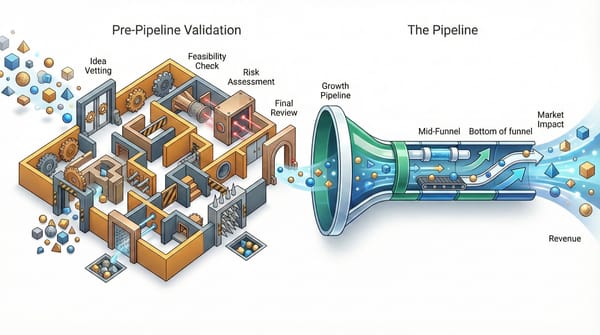

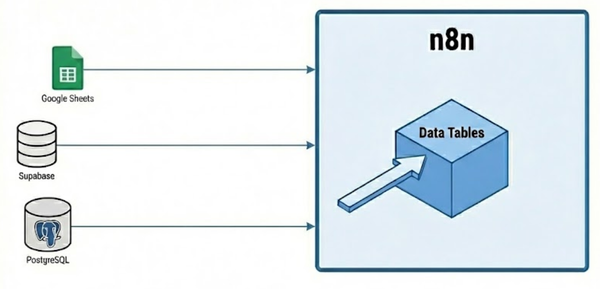

We are building workflows where AI agents (using orchestration layers like Clay) autonomously execute the entire research lifecycle:

- Detect: A signal fires (e.g., a 1-star review on G2 complaining about "API Latency").

- Verify: The agent checks the reviewer's LinkedIn profile to confirm they still hold the role, and the company's careers page to confirm the company is still active. This "Waterfall Verification" beats the Rot Rate because the record is checked at the moment of outreach, not months earlier.

- Draft: The agent drafts a hyper-personalized email referencing that specific G2 complaint.
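The Detect, Verify, Draft loop can be sketched as a small pipeline whose key property is that nothing gets drafted for an unverified record. Everything here is hypothetical: `fake_verify` stands in for the profile and careers-page checks an agent would run, and `template_draft` stands in for an LLM drafting step; neither reflects Clay's actual API.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Signal:
    person: str
    company: str
    source: str  # where the signal fired, e.g. "G2 review"
    quote: str   # the complaint that triggered it

@dataclass
class Verification:
    still_employed: bool
    company_active: bool

def run_loop(
    signal: Signal,
    verify: Callable[[str, str], Verification],
    draft: Callable[[Signal], str],
) -> Optional[str]:
    """Detect -> Verify -> Draft. Returns a draft only for verified signals."""
    check = verify(signal.person, signal.company)
    if not (check.still_employed and check.company_active):
        return None  # stale record: drop it instead of emailing a "ghost"
    return draft(signal)

# Hypothetical stubs standing in for live lookups and an LLM call.
def fake_verify(person: str, company: str) -> Verification:
    return Verification(still_employed=(person != "Former Employee"), company_active=True)

def template_draft(signal: Signal) -> str:
    return (
        f"Hi {signal.person}, I read your {signal.source} comment: "
        f"\"{signal.quote}\". Would it help to see how other teams handle that?"
    )

live = Signal("Dana", "ExampleCorp", "G2 review", "API latency is unacceptable")
stale = Signal("Former Employee", "ExampleCorp", "G2 review", "API latency is unacceptable")
print(run_loop(live, fake_verify, template_draft))
print(run_loop(stale, fake_verify, template_draft))
```

Passing `verify` and `draft` in as functions keeps the loop itself fixed while the data sources and drafting model can be swapped; the stale signal returns None rather than a draft.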

The goal isn't to replace the human element; it's to remove the robotic grunt work. It’s about ensuring that when your team reaches out, they are contacting a verified buyer who has a bleeding neck problem right now.

The Takeaway:

Static data is a liability. The advantage belongs to those who build Signal Libraries. Stop selling to the list, and start selling to the signal.

Thanks for reading,

Troy