State of the Union 2026: The Pivot to Agentic Engineering

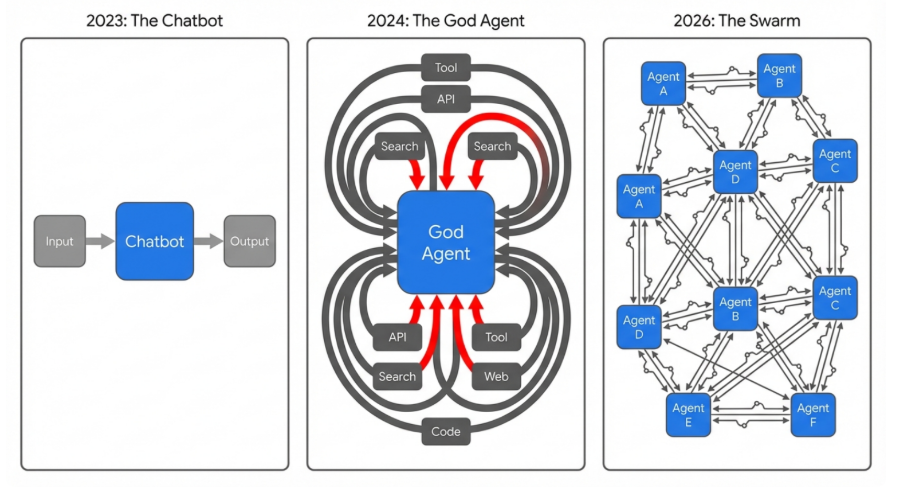

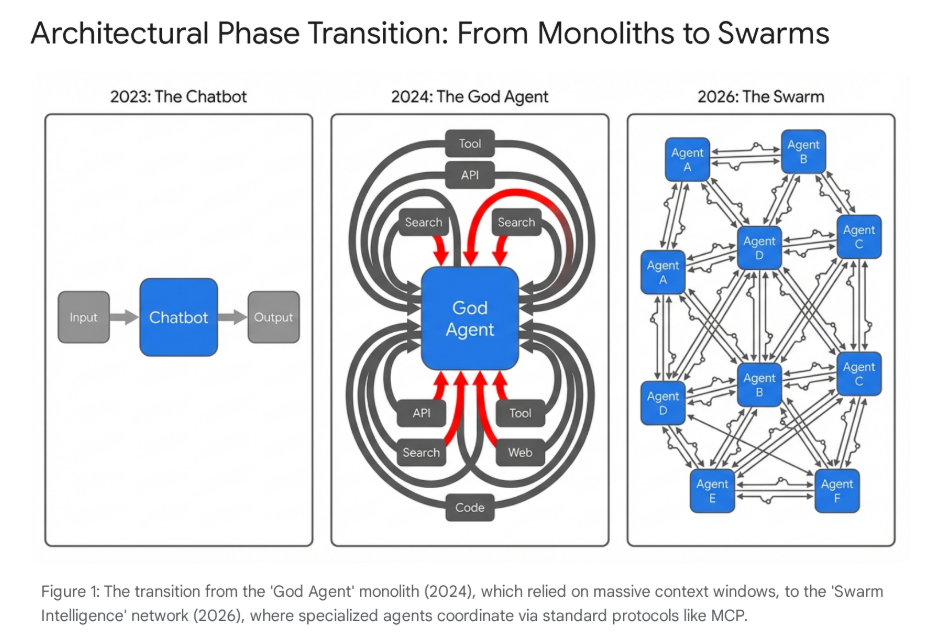

The era of the "General Purpose Chatbot" is over, and the Specialized Agent Swarm is finally becoming deployable in production.

1. The Architectural Shift: Why Your "God Agent" Struggled

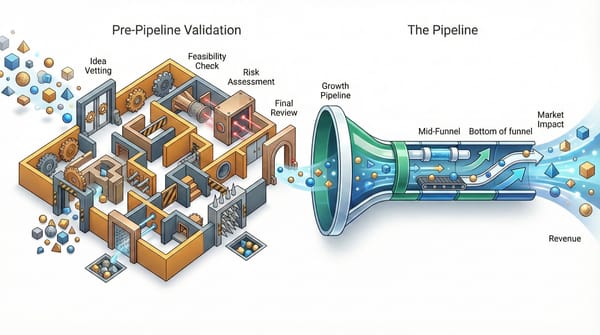

For the last two years, many teams chased one of two monolithic ideas. The first was the "God Bot": a single, omnipotent AI model fed large contexts and expected to solve complex, long-horizon problems through brute force. The second was the "God Agent": massive workflows that were nearly impossible to debug, troubleshoot, or monitor. Both approaches worked some of the time, but not reliably.

If your internal projects stalled, hallucinated, or burned through budget when you tried to scale them, this monolithic approach may have been part of the problem. It has three core flaws:

- The Physics of "Lost in the Middle": Even with the massive context windows of modern models like Gemini 3.x, reasoning degrades non-linearly when you flood the system. When a context window is packed with log dumps, stale tool outputs, and irrelevant history, the model struggles to retrieve the right instructions. It effectively suffers from attention fatigue: it prioritizes recent noise over core directives, causing "instruction drift," where the agent forgets its primary mission halfway through a complex task.

- The Economics of "Context Shoveling": Passing the entire project history into a model at every reasoning step is expensive at scale. If an agent needs to fix a simple JSON formatting error, re-processing a million tokens of history to make that one decision is wasteful. When every step re-reads a history that keeps growing, per-step cost rises linearly with task duration and total cost rises roughly quadratically. You cannot build a positive ROI on that curve.

- The Complexity Collapse: A single agent cannot effectively be a Coder, a QA Tester, a Project Manager, and a Compliance Officer simultaneously. It lacks "separation of concerns." Conflicting system prompts (e.g., "be creative" vs. "be strictly compliant") lead to paralysis or erratic behavior.
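The context-shoveling cost can be made concrete with a back-of-the-envelope model. This sketch assumes the history grows by a fixed number of tokens per step and ignores prompt caching and output tokens; the step counts, tokens per step, and window size are hypothetical.

```python
# Hypothetical figures: substitute your own step count and token sizes.

def full_history_tokens(steps: int, tokens_per_step: int) -> int:
    """Input tokens when every step re-sends the whole, growing history.

    Assumes the history grows by tokens_per_step each step, so step i
    processes i * tokens_per_step tokens and the total is
    tokens_per_step * (1 + 2 + ... + steps).
    """
    return tokens_per_step * steps * (steps + 1) // 2


def focused_context_tokens(steps: int, window_tokens: int) -> int:
    """Input tokens when every step sees a fixed-size, curated context."""
    return steps * window_tokens


if __name__ == "__main__":
    for steps in (100, 200):
        full = full_history_tokens(steps, tokens_per_step=10_000)
        focused = focused_context_tokens(steps, window_tokens=20_000)
        print(f"{steps} steps: full history {full:,} vs focused {focused:,} tokens")
```

With these numbers, 100 steps cost 50,500,000 input tokens with full history versus 2,000,000 with a focused window. Doubling the task to 200 steps roughly quadruples the first figure (201,000,000) while only doubling the second.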

The Winner: Swarm Intelligence

The market is now pivoting to microservice-style Swarm Architectures. Instead of expecting one genius model to do it all, successful teams are deploying networks of specialized, lightweight agents. This mirrors how human organizations evolved: we don't hire one person to be the CEO, CFO, and janitor; we hire specialists.

- Context Hygiene: We now build "Coder Agents" that see only the code, and "Reviewer Agents" that see only the diff and requirements. Keeping each context window focused improves the model's reasoning while cutting cost and latency.

- Parallelism: Unlike a human or a single bot, a swarm can launch five "Researcher Agents" to crawl different data sources simultaneously. A Marketing Swarm can have one agent analyzing SEO trends, another reviewing competitor pricing, and a third drafting copy, all running in parallel before their findings are merged. Each agent can also use the model best suited to its task.

- Resilience: If one agent fails (e.g., a scraper gets blocked), the system doesn't crash. The orchestrator simply retries that specific node or routes the task to a fallback agent. This is how we move from "fragile prototypes" that break on edge cases to "antifragile enterprise systems" that self-heal.
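A minimal sketch of the three properties above, using only Python's `asyncio`. Each node receives only its own task string (context hygiene), all nodes run concurrently (parallelism), and a failing node is retried and then routed to a fallback agent (resilience). The agents are hypothetical stubs, and `orchestrate`, `run_node`, and `AgentError` are illustrative names, not any framework's API.

```python
import asyncio
from typing import Awaitable, Callable

# A hypothetical agent: takes one focused task string, returns its findings.
Agent = Callable[[str], Awaitable[str]]


class AgentError(Exception):
    """Raised when an agent cannot finish its task (e.g. a scraper is blocked)."""


def stub_agent(label: str) -> Agent:
    """Build a stand-in agent; a real one would call a model or a tool."""
    async def agent(task: str) -> str:
        return f"{label} -> {task}"
    return agent


async def blocked_scraper(task: str) -> str:
    raise AgentError(f"blocked while fetching: {task}")


async def run_node(task: str, primary: Agent, fallback: Agent, retries: int = 2) -> str:
    """Retry the primary agent, then route the same task to a fallback agent."""
    for _ in range(retries):
        try:
            return await primary(task)
        except AgentError:
            await asyncio.sleep(0)  # placeholder: real code would back off here
    return await fallback(task)


async def orchestrate(nodes: dict[str, tuple[str, Agent, Agent]]) -> dict[str, str]:
    """Run every node concurrently and merge the findings by node name."""
    names = list(nodes)
    findings = await asyncio.gather(*(run_node(*nodes[n]) for n in names))
    return dict(zip(names, findings))


def marketing_swarm() -> dict[str, tuple[str, Agent, Agent]]:
    # Each node sees only its own task string, never the whole project history.
    return {
        "seo": ("SEO trends for product X", stub_agent("seo"), stub_agent("seo")),
        "pricing": ("competitor pricing", blocked_scraper, stub_agent("pricing-cache")),
        "copy": ("launch email draft", stub_agent("copywriter"), stub_agent("copywriter")),
    }


if __name__ == "__main__":
    for name, finding in asyncio.run(orchestrate(marketing_swarm())).items():
        print(f"{name}: {finding}")
```

Here the pricing scraper always fails, so after two attempts that node is routed to the cached fallback while the SEO and copy nodes complete normally. If a fallback also fails, the exception propagates out of `asyncio.gather`; a production orchestrator would catch and log it.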

The New Standard: "Glass Box" Autonomy

For the CIOs and CTOs reading this: the days of accepting "black box" AI tools are over. You cannot govern what you cannot see. We are advising all our clients to demand Auditability in their AI stack. If an AI agent makes a decision that impacts your business, you need a forensic record of why.

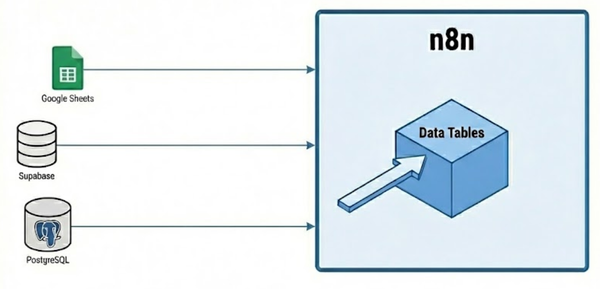

We are seeing a big consolidation around n8n as the orchestration layer of choice for the enterprise, replacing opaque proprietary platforms.

- Why it matters to you: n8n allows us to build "Glass Box" systems. Every action must be logged so that when a swarm updates a CRM record or sends a contract, you can trace exactly what happened and why. This audit trail is non-negotiable for compliance in finance, healthcare, and legal sectors.
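One way to get that forensic record is an append-only, structured log in which every agent action carries a shared trace ID, the inputs it acted on, and the stated rationale. The sketch below writes JSON Lines with the standard library. The field names and agent/action names are hypothetical, and this illustrates the idea rather than n8n's own execution-log format.

```python
import io
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, TextIO


@dataclass
class AuditRecord:
    trace_id: str             # shared by every step of one swarm run
    agent: str                # which agent acted (hypothetical names below)
    action: str               # what it did to which system
    inputs: dict[str, Any]    # the data it acted on
    rationale: str            # the model's stated reason for the decision
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def log_action(sink: TextIO, record: AuditRecord) -> None:
    """Append one record as a JSON line; earlier lines are never rewritten."""
    sink.write(json.dumps(asdict(record), sort_keys=True) + "\n")


def replay(lines: list[str], trace_id: str) -> list[dict[str, Any]]:
    """Return every step of one run, in the order it was logged."""
    records = (json.loads(line) for line in lines if line.strip())
    return [r for r in records if r["trace_id"] == trace_id]


if __name__ == "__main__":
    sink = io.StringIO()  # stand-in for append-only storage
    run_id = str(uuid.uuid4())
    log_action(sink, AuditRecord(run_id, "crm-agent", "update_contact",
                                 {"contact_id": 42, "field": "email"},
                                 "Customer supplied a new address in a ticket"))
    log_action(sink, AuditRecord(run_id, "contract-agent", "send_contract",
                                 {"contract_id": "K-7"}, "Deal marked closed-won"))
    for step in replay(sink.getvalue().splitlines(), run_id):
        print(step["timestamp"], step["agent"], step["action"], step["rationale"])
```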

We are also championing the Model Context Protocol (MCP), the open standard originally introduced by Anthropic.

- The Benefit: MCP is the "USB-C of AI." It decouples your proprietary data from the AI model. You can wrap an internal database in an MCP server once, and then any authorized agent (whether it runs in Claude, an IDE, or a custom internal tool) can access that data through a standardized protocol. This reduces vendor lock-in and future-proofs your data strategy against the rapid turnover of AI models.
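To show what the standardized protocol buys you, here is a toy, in-process sketch of the two MCP requests an agent uses to discover and invoke a tool: `tools/list` and `tools/call`, carried as JSON-RPC 2.0 messages. It assumes a hypothetical `lookup_customer` tool over an in-memory dict standing in for your database. It deliberately omits the initialization handshake, capability negotiation, and transport that a real server needs; in practice you would build the server with one of the official MCP SDKs rather than by hand.

```python
import json
from typing import Any, Callable

# Hypothetical internal data standing in for your database.
CUSTOMERS = {"C-001": {"name": "Acme Corp", "tier": "gold"}}


def lookup_customer(customer_id: str) -> str:
    record = CUSTOMERS.get(customer_id)
    return json.dumps(record) if record else f"No customer {customer_id}"


# Tool registry: name -> (description, JSON Schema for arguments, handler)
TOOLS: dict[str, tuple[str, dict[str, Any], Callable[..., str]]] = {
    "lookup_customer": (
        "Fetch a customer record by ID",
        {"type": "object",
         "properties": {"customer_id": {"type": "string"}},
         "required": ["customer_id"]},
        lookup_customer,
    ),
}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer an MCP-style JSON-RPC 2.0 request for tools/list or tools/call."""
    method, req_id = request.get("method"), request.get("id")
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (desc, schema, _) in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = entry[2](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": req_id, "result": result}
```

The agent side never sees how `lookup_customer` is implemented; it only discovers the tool's name and input schema and then calls it. That indirection is what lets you swap the model without rewriting the data integration.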

The Platform Wars: Winners and Losers

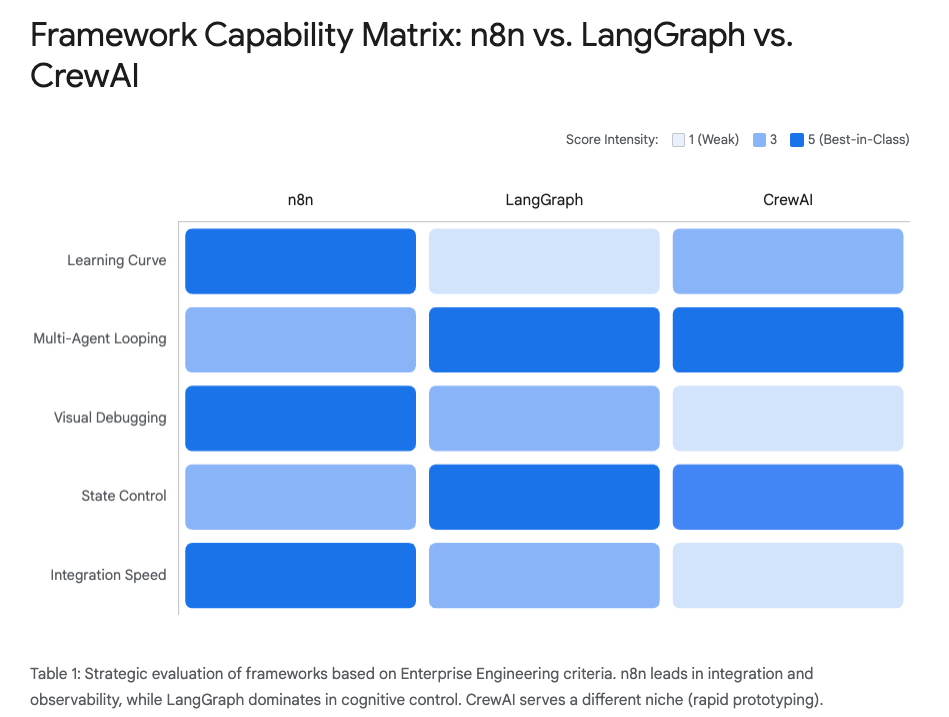

One of the most critical decisions we make as your partner is selecting the underlying infrastructure for these agents. The market has consolidated, and a clear "Framework War" has played out over the last 18 months.

- The Loser: "Black Box" Frameworks (e.g., CrewAI). In 2024, tools like CrewAI generated immense hype for how easily they spun up "teams" of agents. While excellent for prototyping, they have proven to be a trap for enterprise production.

  - The Trap: These frameworks often operate as "black boxes," obscuring the logic between agents. When a swarm fails in production, debugging a hidden conversation between two bots is a nightmare.

  - The Friction: Integrating them with legacy systems (like Oracle or SAP) often requires custom Python glue code for every interaction, which creates technical debt.

- The Winner: The Hybrid Stack (n8n + LangGraph). The industry has coalesced around a hybrid approach that balances control with capability.

  - n8n (The Body): Use n8n as the high-level orchestrator. It manages the business process, connects to your APIs, and provides the visual audit trail. It is the reliable "body" that executes the work.

  - LangGraph (The Brain): Use LangGraph for the cognitive layer. Unlike linear automation, LangGraph models agents as state machines, which allows for sophisticated looping logic (e.g., Plan -> Execute -> Check -> If Fail, Revise Plan -> Execute).

  - The Result: This combination gives you the visual governance of n8n with the complex reasoning capabilities of LangGraph, so your systems are both powerful and transparent.

- [NOTE: n8n and LangGraph increasingly overlap; I'll write about that in an upcoming post.]
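The Plan -> Execute -> Check -> Revise loop is easiest to see as a small state machine: named nodes that read and update a shared state and return the name of the next node. The sketch below uses plain Python so it runs without dependencies. It mirrors the idea behind LangGraph's graph of nodes and conditional edges but does not use LangGraph's API. The node logic and the "add citations" check are hypothetical stand-ins for model and tool calls, and `max_revisions` is an assumed guard against infinite loops.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class State:
    goal: str
    max_revisions: int = 3
    plan: list[str] = field(default_factory=list)
    output: str = ""
    revisions: int = 0
    status: str = "running"


# Hypothetical nodes: in practice each would call a model or a tool.
def plan_node(s: State) -> str:
    s.plan = [f"draft answer to: {s.goal}"]
    return "execute"


def execute_node(s: State) -> str:
    s.output = "; ".join(s.plan)
    return "check"


def check_node(s: State) -> str:
    # Stand-in validator: passes only once a citation step is in the plan.
    if "add citations" in s.output:
        return "done"
    return "revise" if s.revisions < s.max_revisions else "failed"


def revise_node(s: State) -> str:
    s.revisions += 1
    s.plan.append("add citations")
    return "execute"


NODES: dict[str, Callable[[State], str]] = {
    "plan": plan_node, "execute": execute_node,
    "check": check_node, "revise": revise_node,
}


def run(goal: str, max_revisions: int = 3) -> State:
    """Walk the graph from 'plan' until a terminal state is reached."""
    state, node = State(goal=goal, max_revisions=max_revisions), "plan"
    while node not in ("done", "failed"):
        node = NODES[node](state)
    state.status = node
    return state
```

In LangGraph itself, the same shape would be expressed by registering each function as a node on a `StateGraph` and routing out of the check step with a conditional edge.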

The Economic Pivot: Paying for Outcomes, Not Seats

The traditional software business model is broken in the age of autonomy. We are facing an "Efficiency Paradox": the better the technology works, the less sense the old billing models make.

- The Old Way (SaaS): You pay $30/user/month. This makes sense for a tool that helps a human work 10% faster. It makes no sense when an agent does the work of 10 humans. The value captured by the vendor is completely disconnected from the value delivered to the client.

- The Old Way (Hourly): You pay a firm by the hour to build code. This misaligns incentives; if we are inefficient or our agents are slow, we make more money.

The Shift: Service-as-Software (SaS)

We are seeing the market move toward Outcome-Based Pricing. This is a fundamental realignment of the vendor-client relationship.

- The Win-Win: Instead of buying a tool, you buy the completed work. You pay per invoice processed, per meeting booked, per background check completed, or a percentage of the savings generated.

- The Incentive: This aligns your partners with your success. It incentivizes firms like ours to build efficient, reliable agents, because every error, hallucination, or unnecessary retry now costs us money (in compute and API fees), not you.

- The Hybrid Pricing Model: To bridge the gap, we are deploying hybrid models in which a baseline retainer covers "availability and governance" (keeping the lights on, the system logging, and the kill switches active), while variable fees capture the upside of the work performed.
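The incentive shift is simple arithmetic. The sketch below assumes a hypothetical $2,000 monthly retainer, a $1.50 fee per invoice processed, 4,000 invoices, and $0.20 of compute per agent attempt; amounts are in cents so the arithmetic stays exact.

```python
# All amounts are in cents so the arithmetic stays exact. Figures are hypothetical.

def hybrid_invoice_cents(retainer: int, fee_per_outcome: int, outcomes: int) -> int:
    """Client's monthly bill: baseline retainer plus a fee per completed outcome."""
    return retainer + fee_per_outcome * outcomes


def variable_margin_cents(fee_per_outcome: int, outcomes: int,
                          cost_per_attempt: int, attempts: int) -> int:
    """Vendor's margin on the variable fees. Every attempt, including failed ones
    and retries, burns compute the vendor pays for; the client's bill is unchanged."""
    return fee_per_outcome * outcomes - cost_per_attempt * attempts


if __name__ == "__main__":
    # $2,000 retainer + $1.50 per invoice x 4,000 invoices
    print(hybrid_invoice_cents(200_000, 150, 4_000) / 100)       # 8000.0
    # Same 4,000 invoices, $0.20 per attempt: efficient vs. retry-heavy agent
    print(variable_margin_cents(150, 4_000, 20, 4_800) / 100)    # 5040.0
    print(variable_margin_cents(150, 4_000, 20, 8_000) / 100)    # 4400.0
```

The client's $8,000 bill is identical in both scenarios; the retry-heavy agent, needing 8,000 attempts instead of 4,800 to finish the same work, costs the vendor $640 of margin. That is the alignment outcome-based pricing is meant to create.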

Governance: The Safety Mandate

I've had many conversations with executives that come down to one question: "What if the agent goes rogue?"

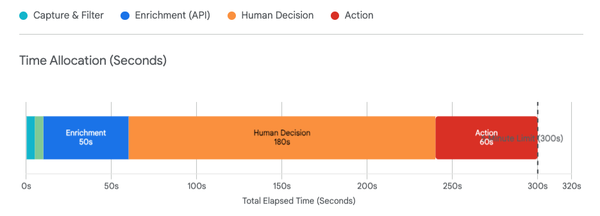

It is a valid fear. You cannot deploy autonomy without safety systems. "Governance Engineering" is now the primary differentiator between "toy" AI and "industrial" AI.

We adhere to a strict "Kill Switch" Mandate:

- Circuit Breakers: Every critical workflow should be wrapped in a deterministic code layer (non-AI). If a "Refund Agent" tries to issue a refund >$500, or a "Communication Agent" attempts to email more than 50 leads in an hour, a hard-coded circuit breaker trips. It freezes the account and alerts a human before the transaction executes. This separates the "Doer" (the AI) from the "Checker" (the Code).

- Shadow Mode: Agents should not learn on the job with write access to your live systems. Run them in Shadow Mode first: they process real production data streams, but with write access disabled. Then compare their "shadow" decisions against your human operators' actual decisions to calculate a Shadow Match Rate. Only when an agent achieves a match rate above 99% should you promote it to take over that process.
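Both controls are ordinary deterministic code. The sketch below assumes the thresholds from the text ($500 per refund, 50 emails per rolling hour, a match rate above 99%). The class and function names, the `alert` hook, and the injectable clock are hypothetical, and a real deployment would persist the frozen flag and email history rather than keep them in memory.

```python
import time
from collections import deque
from typing import Callable


class CircuitBreakerTripped(Exception):
    """Raised instead of executing an action that breaches a hard limit."""


class CircuitBreaker:
    """Deterministic (non-AI) checker that sits between an agent and a live system."""

    def __init__(self, max_refund: float = 500.0, max_emails_per_hour: int = 50,
                 alert: Callable[[str], None] = print,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_refund = max_refund
        self.max_emails_per_hour = max_emails_per_hour
        self.alert = alert          # hypothetical hook: page or notify a human
        self.clock = clock          # injectable so tests can control time
        self.sent_at: deque[float] = deque()
        self.frozen = False

    def _guard(self) -> None:
        if self.frozen:
            raise CircuitBreakerTripped("agent frozen pending human review")

    def _trip(self, reason: str) -> None:
        self.frozen = True
        self.alert(f"Circuit breaker tripped: {reason}")
        raise CircuitBreakerTripped(reason)

    def approve_refund(self, amount: float) -> None:
        """Call before the refund executes; raises if it must not run."""
        self._guard()
        if amount > self.max_refund:
            self._trip(f"refund ${amount:.2f} exceeds ${self.max_refund:.2f} limit")

    def approve_email(self) -> None:
        """Call before each outbound email; enforces a rolling one-hour window."""
        self._guard()
        now = self.clock()
        while self.sent_at and now - self.sent_at[0] >= 3600:
            self.sent_at.popleft()
        if len(self.sent_at) >= self.max_emails_per_hour:
            self._trip(f"more than {self.max_emails_per_hour} emails in one hour")
        self.sent_at.append(now)


def shadow_match_rate(agent_decisions: list[str], human_decisions: list[str]) -> float:
    """Fraction of cases where the shadow agent matched the human operator."""
    if not human_decisions or len(agent_decisions) != len(human_decisions):
        raise ValueError("need non-empty, equal-length decision logs")
    matches = sum(a == h for a, h in zip(agent_decisions, human_decisions))
    return matches / len(human_decisions)


def ready_to_promote(match_rate: float, threshold: float = 0.99) -> bool:
    """Promote only when the match rate is strictly above the threshold."""
    return match_rate > threshold
```

Because the agent only proposes actions and the breaker decides whether they run, a misbehaving model cannot talk its way past the limit.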

Product Strategy: Beyond "Chat with PDF"

Finally, a note on user experience. We need to stop asking people to "chat" with their data. The "Chat with your files" novelty has worn off, and it is a high-friction way to get work done for most people, who are not expert prompters and don't have polished LLM-based workflows.

The New Paradigm: Swarms that create outputs

So where does this leave us?

- The Shift: We are moving from Conversation to Completion. Users should not have to prompt an agent 20 times to get a result; they should give a high-level goal and receive a file or an output.

- The Reality: Instead of a bot that answers questions, we are building Deep Research Swarms. You give a high-level goal—"Draft a compliance matrix for this 100-page tender document"—and the swarm performs the 50+ steps of research, cross-referencing, drafting, and self-critique.

- The Output: The deliverable is not a chat bubble; it is a formatted Excel file, a Word report, or a populated database record, ready for final human signature. This shifts the human role from "Operator" to "Reviewer."
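A compressed sketch of the Conversation-to-Completion pattern: a high-level goal goes in and a file comes out. The 50+ steps are reduced to three hypothetical stub stages (extract requirements, cross-reference evidence, self-critique), and the output is written as CSV with the standard library so it runs anywhere; a library such as openpyxl could write a native .xlsx instead. The tender text and evidence base are made up.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class MatrixRow:
    req_id: str
    requirement: str
    response: str
    status: str


# Hypothetical evidence base the cross-referencing step searches.
EVIDENCE = {
    "encrypt": "AES-256 encryption at rest (hypothetical policy reference)",
}


def extract_requirements(tender: str) -> list[tuple[str, str]]:
    """Research step (stub): treat every 'shall' sentence as a requirement."""
    found = [line.strip() for line in tender.splitlines() if "shall" in line.lower()]
    return [(f"R{i}", text) for i, text in enumerate(found, start=1)]


def cross_reference(requirement: str) -> str:
    """Drafting step (stub): cite the first matching piece of evidence, if any."""
    for keyword, evidence in EVIDENCE.items():
        if keyword in requirement.lower():
            return evidence
    return ""


def self_critique(req_id: str, requirement: str, draft: str) -> MatrixRow:
    """Critique step (stub): never claim compliance without supporting evidence."""
    if draft:
        return MatrixRow(req_id, requirement, draft, "Compliant")
    return MatrixRow(req_id, requirement, "No supporting evidence found",
                     "Needs human review")


def build_compliance_matrix(tender: str) -> str:
    """Run the pipeline end to end and return the CSV file's contents."""
    rows = [self_critique(rid, req, cross_reference(req))
            for rid, req in extract_requirements(tender)]
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["ID", "Requirement", "Response", "Status"])
    for row in rows:
        writer.writerow([row.req_id, row.requirement, row.response, row.status])
    return out.getvalue()


if __name__ == "__main__":
    tender = """The supplier shall encrypt all customer data at rest.
Prices are fixed for 12 months.
The supplier shall provide 24/7 phone support."""
    print(build_compliance_matrix(tender))
```

The "Needs human review" status is where the human Reviewer comes in: the swarm does the legwork, and a person signs off on anything it could not substantiate.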

The Path Forward

The "toy" phase of AI is behind us. The winners of 2026 will be the companies that treat AI not as a magic trick, but as a workforce that requires strategic architecture, solid engineering, great governance, and constant improvement.

This pivot requires courage: abandoning the comfort of chatbots and massive "God Agent" workflows to embrace the complexity of swarms. But the firms that make this transition now will dominate the landscape for the next decade.

Thanks for reading.

Troy