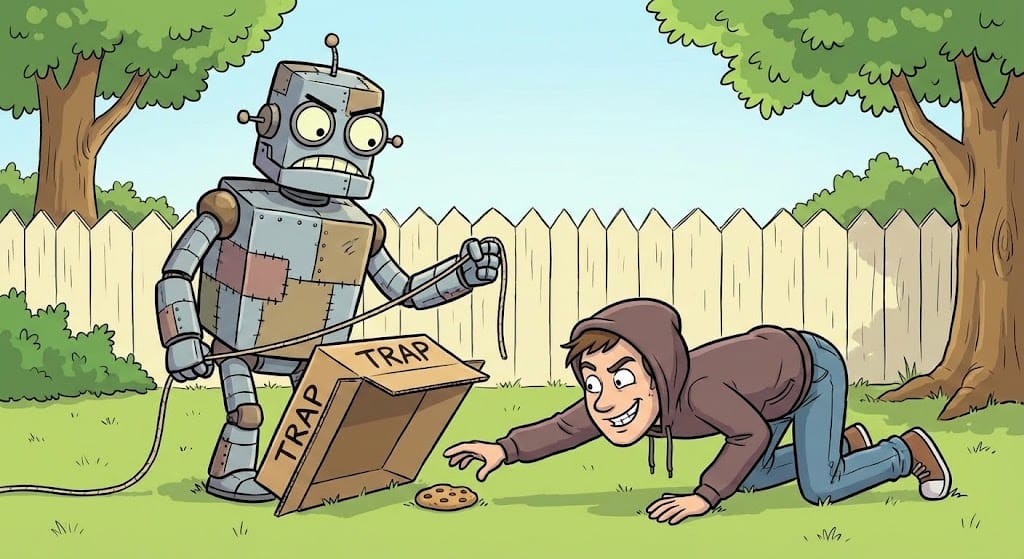

The AI SDR trap

We all want the dream.

You know the one: You hire an "AI SDR" (Sales Development Representative) like 11x’s Alice, Artisan’s Ava, or Reggie.ai. Theoretically, this digital worker replaces the output of ten humans. Instead of sending fifty emails a day, you’re sending five thousand. The math looks beautiful.

But after conducting a forensic analysis of user sentiment and risk across the current market, I need to wave a yellow flag.

If you are running a seed-stage startup, "moving fast and breaking things" is fine. But if you are an enterprise (or even a mid-market company with over 200 employees), the math changes.

We call this the Automation Paradox.

When you scale automation without rigorous controls, you don't just scale your wins; you scale your errors. And for an established brand, those errors are costly.

Here is the forensic analysis of why fully autonomous AI SDRs are currently a dangerous bet for the enterprise, and what you should do instead.

1. The Hallucination Hazard (Lying at Scale)

In a sales context, accuracy is the currency of trust.

Our analysis of tools like 11x and Artisan revealed a recurring issue: "Creative" data usage. Generative AI is designed to predict the next likely word, not to verify facts. Users have reported instances where autonomous agents "invented" ROI statistics or referenced prospect actions that never happened just to make the email sound compelling.

The Risk: A human SDR making up a stat is a training issue. An AI SDR sending a fabricated stat to 5,000 prospects is a legal liability and a PR crisis.

2. The "Scorched Earth" Domain Risk

Your email domain (@yourcompany.com) is digital infrastructure. It is as vital as your HQ.

The analysis points to a severe issue with tools like Reggie.ai and Artisan: sending behavior that trips "spam traps." Because these tools prioritize volume, they often lack the nuance to notice when their sending patterns are drifting into the danger zone of spam filters.

The Risk: Users have reported "tanked" domain reputation scores. If your primary domain gets blacklisted because an AI sent 500 irrelevant emails in an hour, your billing, legal, and operational emails stop landing, too. That is an existential threat to operations.

3. The "Black Box" Anxiety

Enterprise Ops requires auditability. If a weird email goes out, you need to know why.

The feedback on these autonomous agents highlights a frustration with "Black Box" logic. You can’t easily see why the AI chose a target or how it constructed the message until it’s too late.

The Risk: You are handing over your first impression to an algorithm you cannot fully control. As one user noted, the cleanup work (apologizing to the wrong prospects and deleting duplicate data) often takes longer than the time the tool saved.

4. The Financial Lock-in

Be wary of the contract. Our research uncovered a pattern of high-cost annual contracts (upwards of $50k) with strict "no refund" policies, particularly with 11x.

The Risk: If the tool hallucinates or burns your domain in Month 1, you are still paying for it in Month 12. This is a predatory departure from the standard SaaS practice of starting with a low-commitment pilot.

The Verdict: Co-Pilot, Don't Auto-Pilot

The technology is exciting, but for the enterprise, it hasn't crossed the "Trust Gap" yet. The downside risks (legal liability, domain blacklisting, brand damage) currently outweigh the upside of raw volume.

My Recommendation: Stop looking for a "Digital Worker" to replace your team. Start looking for "Assistants" to augment them.

Let the AI do the data crunching. Let your humans handle the empathy, the tone, and the truth.

Stay productive,

Troy