The Architecture of Intent: Engineering Signal Processing on LinkedIn

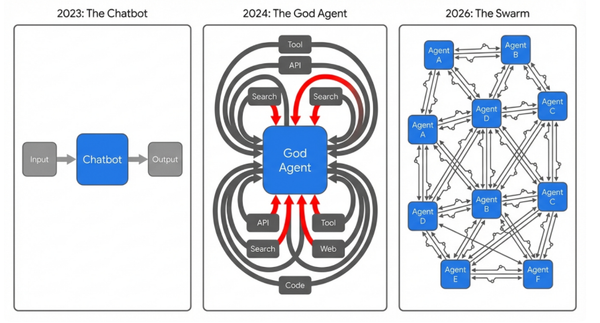

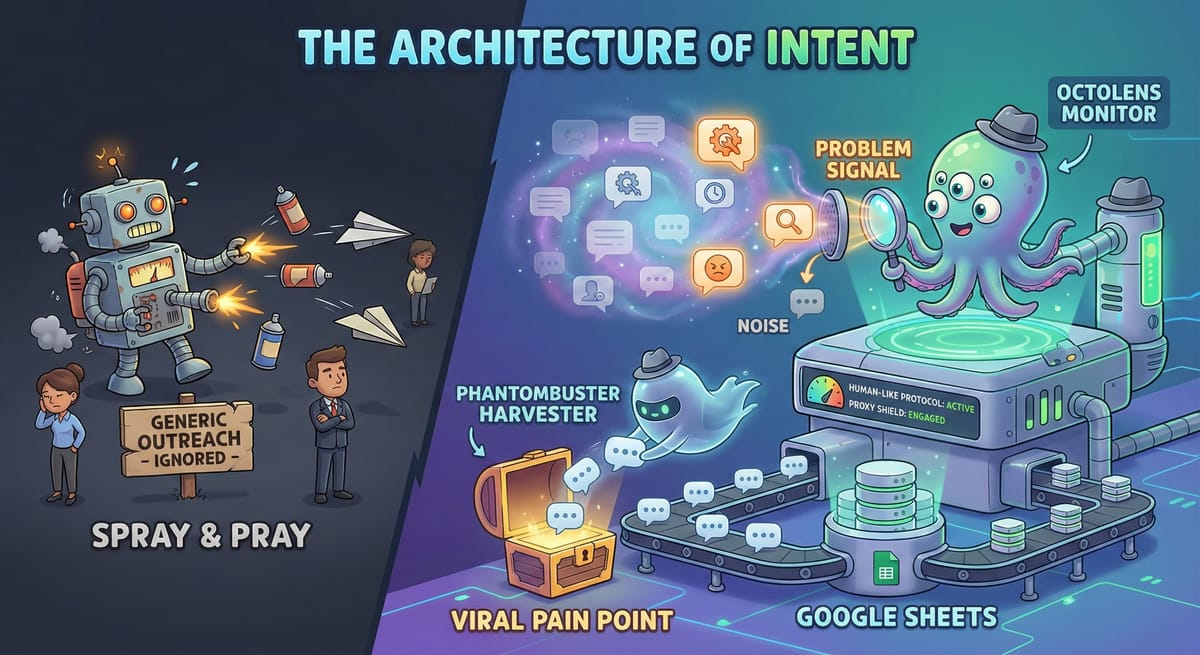

The era of "spray and pray" cold outreach is effectively over. The traditional methodology (high-volume email campaigns and generic connection requests) is losing effectiveness because of three converging factors: oversaturation, increasingly sophisticated spam-filtering algorithms, and a cultural shift in which professionals reflexively ignore unsolicited communications.

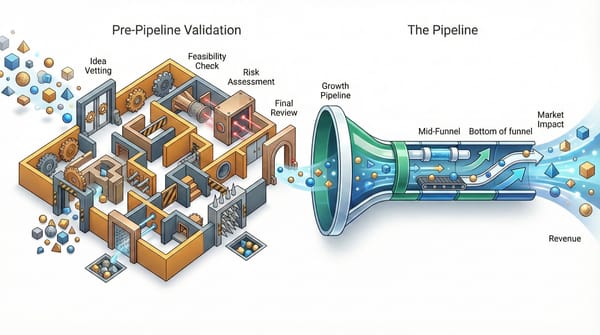

The modern Go-To-Market (GTM) competitive advantage is no longer found in better copywriting or higher send volumes; it is found in better signal processing, specifically the engineering capability to identify "problem-centric conversations" rather than just static job titles.

This is Intent-Based Social Selling. The objective is not to find a person with a specific title (e.g., "VP of Engineering"), but to find a person with a specific problem at a specific moment in time.

Most GTM teams fail at this because they rely on the wrong infrastructure. This briefing outlines the technical architecture required to build a high-fidelity signal processing machine on LinkedIn, moving from passive brand monitoring to active intent discovery.

The Core Problem: The API Blind Spot

To understand why your current stack is failing, you must understand the technical constraints of LinkedIn. Standard enterprise social listening tools (like Hootsuite, Sprout Social, or Brand24) rely entirely on LinkedIn's official API.

These APIs are designed for Brand Management, not Lead Generation. They operate within a strict "Walled Garden" to protect user privacy and LinkedIn's own monetization (Sales Navigator).

- What they see (Brand Level): Direct tags (e.g., "@Microsoft, your service is down") and comments on your own company page.

- What they miss (Intent Level): The "Dark Matter" of the feed—the vast majority of personal profile posts where users discuss problems without tagging a specific vendor.

For example, if a prospect posts, "I am struggling with my current CRM implementation, the database latency is killing us," but does not tag Salesforce or HubSpot, traditional listening tools will remain completely blind to this signal.
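To make the blind spot concrete, here is a minimal Python sketch contrasting two detection strategies on the example post: a brand-level filter that fires only on explicit @-tags (a rough stand-in for what API-based listening tools surface) and an intent-level filter that matches pain phrases in the text itself. The tag set, phrase list, and function names are illustrative assumptions, not any vendor's logic.

```python
import re

# Hypothetical brand handles a tag-based listener would watch for.
BRAND_TAGS = {"@salesforce", "@hubspot"}

# Hypothetical "pain phrases" that describe the problem, not the vendor.
PAIN_PHRASES = ["struggling with", "crm implementation", "database latency"]


def brand_level_match(post: str) -> bool:
    """Fires only when the post explicitly tags a watched brand."""
    tags = {t.lower() for t in re.findall(r"@\w+", post)}
    return bool(tags & BRAND_TAGS)


def intent_level_match(post: str) -> list[str]:
    """Returns every pain phrase found in the post, case-insensitively."""
    text = post.lower()
    return [p for p in PAIN_PHRASES if p in text]


post = ("I am struggling with my current CRM implementation, "
        "the database latency is killing us")

print(brand_level_match(post))   # False: no vendor is tagged
print(intent_level_match(post))  # ['struggling with', 'crm implementation', 'database latency']
```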

To capture this data, you must move beyond official APIs to a new class of "Grey Hat" and "AI-First" architectures that utilize browser emulation and proprietary indexing to surface these "un-tagged" conversations.

The Tooling Landscape: Monitoring vs. Extraction

To build a functional intent pipeline, you cannot rely on a single tool. You need two distinct classes of software, one that monitors broadly and one that extracts deeply:

1. The "Always-On" Monitor (Recommendation: Octolens)

- Function: Acts as a specialized search engine for social conversations, indexing content that is difficult to query natively.

- The AI Mechanism: Raw keyword matching on social media produces high noise. A search for "Python," for instance, might return posts about snakes rather than coding. Octolens utilizes Large Language Models (LLMs) to score mentions as "High," "Medium," or "Low" relevance based on semantic context.

- Use Case: Real-time detection of high-intent keywords (e.g., "SOC2 compliance," "slow database," "alternatives to [Competitor]").

- The Output: A filtered stream of "High Relevance" signals, stripped of most of the noise that plagues standard listening tools.
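This sketch does not reproduce Octolens's LLM-based scoring. It swaps in a deliberately crude technique, counting context words that co-occur with an ambiguous keyword, purely to illustrate the idea of tiering: every raw keyword hit gets a High, Medium, or Low label, and only High hits flow downstream. The vocabularies, thresholds, and the `relevance_tier` name are assumptions.

```python
import re

# Hypothetical context vocabularies for the ambiguous keyword "python".
CODING_CONTEXT = {"code", "script", "library", "pandas", "django", "deploy", "api"}
OFF_TOPIC_CONTEXT = {"snake", "reptile", "zoo", "venom", "pet"}


def relevance_tier(post: str, keyword: str = "python") -> str:
    """Crude stand-in for LLM relevance scoring: returns High, Medium, or Low."""
    words = set(re.findall(r"[a-z]+", post.lower()))
    if keyword not in words:
        return "Low"
    on_topic = len(words & CODING_CONTEXT)
    off_topic = len(words & OFF_TOPIC_CONTEXT)
    if on_topic >= 2 and off_topic == 0:
        return "High"
    if on_topic > off_topic:
        return "Medium"
    return "Low"


mentions = [
    "Our Python script keeps timing out when we deploy the API",
    "Saw a python at the zoo today, huge snake",
    "Anyone else learning Python? Which library should I start with?",
]
high_only = [m for m in mentions if relevance_tier(m) == "High"]
print([relevance_tier(m) for m in mentions])  # ['High', 'Low', 'Medium']
```

An LLM replaces the hand-written vocabularies with semantic judgment, but the downstream contract is the same: a label per mention and a filter on that label.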

2. The Deep Harvester (Recommendation: PhantomBuster)

- Function: Automates browser actions to extract bulk data from specific targets. It does not "listen" broadly; it "extracts" deeply.

- The Mechanism: Uses "Phantoms" (cloud-based scripts) to simulate human browsing behavior. It can access data layers unavailable to standard crawlers, such as the full comment history of a specific post.

- Use Case: Identifying a viral post discussing a relevant pain point (e.g., a thought leader asking "What is broken in B2B sales?") and extracting every single commenter to build a highly targeted lead list.

- The Output: High-volume CSV datasets containing profile URLs, names, and headlines, ready for enrichment.
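Harvested commenter lists usually need cleanup before enrichment, because the same person can appear under slightly different profile URLs. The sketch below parses an export and deduplicates on a normalized URL. The column names (`profileUrl`, `fullName`, `headline`) and the sample rows are hypothetical; real PhantomBuster exports vary by Phantom, so map the headers to whatever your file actually contains.

```python
import csv
import io
from urllib.parse import urlsplit

# Hypothetical export; real column names vary by Phantom.
SAMPLE_EXPORT = """profileUrl,fullName,headline
https://www.linkedin.com/in/jane-doe/?miniProfileUrn=abc,Jane Doe,VP Sales at Acme
https://www.linkedin.com/in/jane-doe,Jane Doe,VP Sales at Acme
https://www.linkedin.com/in/raj-patel/,Raj Patel,RevOps Lead
"""


def normalize_profile_url(url: str) -> str:
    """Lowercases the host and drops query, fragment, and trailing slash."""
    parts = urlsplit(url.strip())
    return f"{parts.scheme}://{parts.netloc.lower()}{parts.path.rstrip('/')}"


def dedupe_commenters(csv_text: str) -> list[dict]:
    """Parses the export and keeps the first row seen for each profile."""
    seen: set[str] = set()
    leads = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = normalize_profile_url(row["profileUrl"])
        if key in seen:
            continue
        seen.add(key)
        leads.append({**row, "profileUrl": key})
    return leads


for lead in dedupe_commenters(SAMPLE_EXPORT):
    print(lead["fullName"], lead["profileUrl"])
```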

The Architecture: Bridging Signal to System

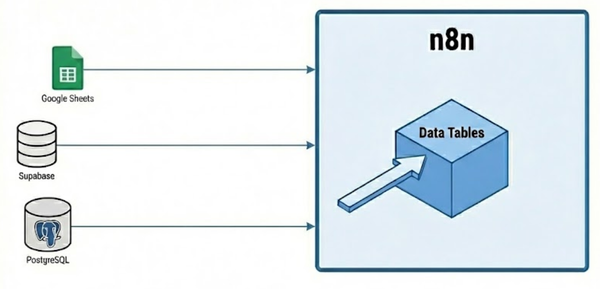

Finding the signal is only step one. The engineering challenge is moving that signal into a structured environment (Google Sheets, CRM, or Slack) without manual data entry.

There are two primary architectural patterns for this, depending on your need for agility versus volume:

Pattern A: The Real-Time Pipeline (High Agility)

- Ideal For: Immediate intervention and "speed to lead."

- The Stack: Octolens (Source) + Webhooks + Zapier/Make/n8n (Middleware) + Google Sheets (Destination).

- The Flow:

- Octolens detects a post matching your "Problem Profile" with a High Relevance score.

- It triggers a Webhook, sending a JSON payload containing the Post URL, Author Name, Content, and Sentiment.

- The Middleware parses this JSON and automatically appends a formatted row to your Google Sheet.

- Result: A live, self-populating database of active buyers that updates the moment a relevant conversation happens.
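The middleware step in Pattern A can be sketched with nothing but the Python standard library. The payload field names (`post_url`, `author_name`, `content`, `sentiment`, `relevance`) are assumptions, not Octolens's documented webhook schema, and a local CSV file stands in for the Google Sheet; in practice Zapier, Make, or n8n would perform the append.

```python
import csv
import json
from datetime import datetime, timezone
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional

SHEET_PATH = "leads.csv"  # Hypothetical local file standing in for the Google Sheet.
COLUMNS = ["received_at", "post_url", "author_name", "content", "sentiment"]


def payload_to_row(payload: dict) -> Optional[list[str]]:
    """Maps a webhook payload to a row; returns None unless relevance is "High"."""
    if payload.get("relevance") != "High":
        return None
    received = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return [received] + [str(payload.get(col, "")) for col in COLUMNS[1:]]


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
        except ValueError:  # Covers malformed JSON and undecodable bytes.
            payload = None
        if not isinstance(payload, dict):
            self.send_response(400)
            self.end_headers()
            return
        row = payload_to_row(payload)
        if row is not None:
            # One appended line per signal mirrors "append a row" in the sheet.
            with open(SHEET_PATH, "a", newline="", encoding="utf-8") as f:
                csv.writer(f).writerow(row)
        self.send_response(204)  # A 2xx tells the sender the delivery succeeded.
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Blocks forever, accepting webhook POSTs on the given port."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Calling `serve()` starts the listener; the webhook URL configured in the source tool would need to point at wherever this runs. Filtering on relevance inside the middleware, rather than trusting every delivery, keeps the sheet limited to High-scored signals even if the upstream filter is configured loosely.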

Pattern B: The Batch Injection (High Volume)

- Ideal For: Building large lists of distinct leads for cold outreach campaigns.

- The Stack: PhantomBuster + Live CSV + Google Sheets.

- The Flow:

- You configure a "Phantom" to run a specific search or scrape a specific post every 24 hours.

- The Phantom saves results to a cloud-hosted CSV file with a permanent URL.

- Your Google Sheet uses the =IMPORTDATA("https://phantombuster.com/...") function to pull this data in automatically and refresh it periodically, with no manual import.

- Result: A daily-refreshed list of hundreds of leads, ready for enrichment and sequencing.
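`=IMPORTDATA()` handles the fetch inside Google Sheets. If you also want to process the batch in a script, for example to surface only leads that did not appear in earlier runs, a minimal sketch could look like this. `RESULTS_URL` is a hypothetical placeholder, the `profileUrl` key is an assumed column name, and the in-memory `seen` set would need persisting between runs in a real daily job.

```python
import csv
import io
import urllib.request

# Hypothetical placeholder, not a real PhantomBuster URL.
RESULTS_URL = "https://example.com/phantom-results.csv"


def fetch_csv_rows(url: str, timeout: float = 30.0) -> list[dict]:
    """Downloads and parses a CSV, similar in spirit to =IMPORTDATA() in Sheets."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        # "utf-8-sig" also strips a byte-order mark if the file has one.
        text = resp.read().decode("utf-8-sig")
    return list(csv.DictReader(io.StringIO(text)))


def new_leads(rows: list[dict], seen_urls: set, key: str = "profileUrl") -> list[dict]:
    """Returns rows whose profile URL was not in an earlier batch; updates seen_urls."""
    fresh = []
    for row in rows:
        url = (row.get(key) or "").strip()
        if url and url not in seen_urls:
            seen_urls.add(url)
            fresh.append(row)
    return fresh


# Offline usage with two hand-written daily batches:
seen: set = set()
new_leads([{"profileUrl": "a"}, {"profileUrl": "b"}], seen)  # day 1: both are new
print(new_leads([{"profileUrl": "b"}, {"profileUrl": "c"}], seen))
# [{'profileUrl': 'c'}]
```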

Operational Security: Managing the "Grey Hat" Risk

Because these tools operate by simulating user behavior rather than using official APIs, they carry operational risks. LinkedIn monitors for "bot-like" activity. To ensure long-term stability:

- Adhere to Rate Limits: Do not exceed 80-100 profile actions per day on standard accounts, or 150-250 for Sales Navigator users.

- Use Dedicated Proxies: Ensure your tools are using high-quality proxies (often included in premium tiers) to mask automation footprints.

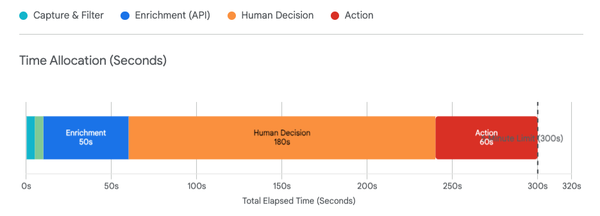

- Human-in-the-Loop: Never automate the outreach message directly from the listening tool. Use these tools to gather the data, but use a separate, dedicated sending infrastructure (or manual review) for the actual contact.
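The rate-limit rule can be enforced mechanically by gating every automated action behind a per-day counter. This hypothetical `DailyActionBudget` helper uses the conservative lower end of each range above; the account-type keys are assumptions, and the counter lives in memory, so a real deployment would need to persist it.

```python
from datetime import date
from typing import Optional

# Conservative lower ends of the ranges quoted above.
DAILY_LIMITS = {"standard": 80, "sales_navigator": 150}


class DailyActionBudget:
    """Counts profile actions per calendar day and refuses once the cap is reached."""

    def __init__(self, account_type: str = "standard") -> None:
        self.limit = DAILY_LIMITS[account_type]
        self.day: Optional[date] = None
        self.used = 0

    def try_consume(self, today: Optional[date] = None) -> bool:
        """Records one action and returns True, or returns False if today's cap is spent."""
        today = today or date.today()
        if today != self.day:  # First action on a new day resets the counter.
            self.day, self.used = today, 0
        if self.used >= self.limit:
            return False
        self.used += 1
        return True


budget = DailyActionBudget("standard")
day_one = date(2024, 1, 1)
print(sum(budget.try_consume(day_one) for _ in range(100)))  # 80
print(budget.try_consume(date(2024, 1, 2)))  # True: a new day starts a fresh count
```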

Implementation Protocol

- Audit Your Current Stack: If you are using Brand Monitoring tools (Sprout, Hootsuite) for Lead Gen, stop. They are effectively blind to the conversations that matter.

- Define "Problem Keywords": Shift your taxonomy from "Brand Keywords" (your name) to "Pain Keywords" (the problem you solve). Monitor the pain, not the product.

- Automate the Capture: If your SDRs are manually copying and pasting links from LinkedIn into a spreadsheet, your process is fundamentally broken. Deploy the webhook architecture described in Pattern A.

This architecture allows you to move from guessing who might be interested to knowing exactly who is struggling right now.

Thanks for reading.

Troy