The "USB-C" moment for Enterprise AI is finally here

The MCP platform war ended before it started, and that's a good thing.

If you’ve been overseeing AI pilots over the last two years, you’re likely familiar with the dreaded "integration headache." It usually plays out like this:

Your team identifies a high-value use case—say, a customer support bot that needs to query your order database. You spend precious engineering cycles building a bespoke adapter to get GPT-4 talking to that internal SQL system. It works beautifully for a week. Then, the landscape shifts. A faster, cheaper model drops (like Claude 3.5 Sonnet), or your CTO decides to switch cloud providers to optimize spend.

Suddenly, that custom connector is obsolete. You are forced to rebuild the bridge from scratch because API definitions, error-handling conventions, and data schemas differ from provider to provider.

Credit: Google Ultra Deep Research. Prompt by Troy Angrignon

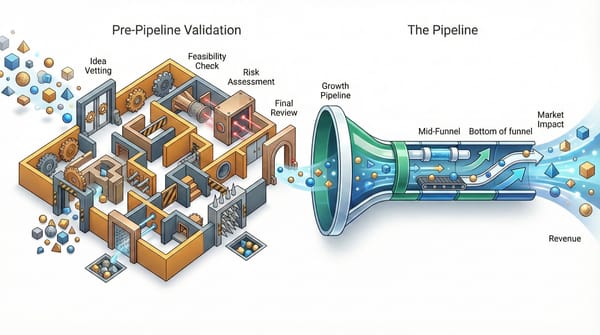

In the industry, we call this the "N×M Integration Problem." Here is the math of the nightmare: If you have 5 different foundation models you want to test (the "N") and 50 internal systems like databases, CRMs, and document stores (the "M"), you aren't just building 55 connections. You are potentially on the hook for building and maintaining 250 distinct integration pathways (5×50).
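
The arithmetic is easy to check for yourself. Here is a minimal sketch using the 5 models and 50 systems from the example above. The function names are purely illustrative, and the second function assumes every model and every system implements one shared interface exactly once.

```python
def point_to_point(models: int, systems: int) -> int:
    """Every model needs its own bespoke adapter for every system."""
    return models * systems


def with_shared_interface(models: int, systems: int) -> int:
    """Each model and each system implements the shared interface once."""
    return models + systems


n_models, m_systems = 5, 50
print(point_to_point(n_models, m_systems))         # 250
print(with_shared_interface(n_models, m_systems))  # 55
```

The gap widens with every model or system you add: one more model costs 50 new adapters in the first case and a single implementation in the second.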

This creates a massive "technical debt" burden. Every time an API changes, multiple connectors break. It introduces security vulnerabilities at every unique touchpoint, and perhaps worst of all, it effectively freezes your innovation. When the cost of switching models is this high, you stop experimenting. You get locked into the first model you built for, simply because the switching costs are too painful.

But the era of this "connector chaos" is officially ending.

Enter the Model Context Protocol (MCP)

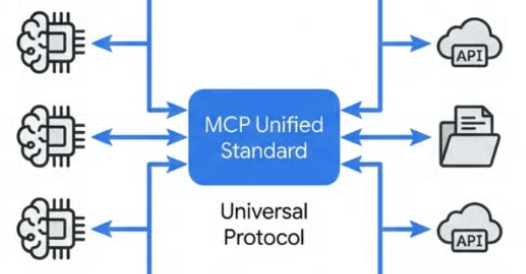

Think of MCP as "USB-C for AI."

Before USB, the hardware world was fragmented. You had Serial ports for mice, Parallel ports for printers, and PS/2 ports for keyboards. Every peripheral needed a specific physical cable and often a proprietary driver. USB standardized the interface, decoupling the device from the computer. It didn't matter who made the mouse or who made the laptop; if they both spoke USB, they worked.

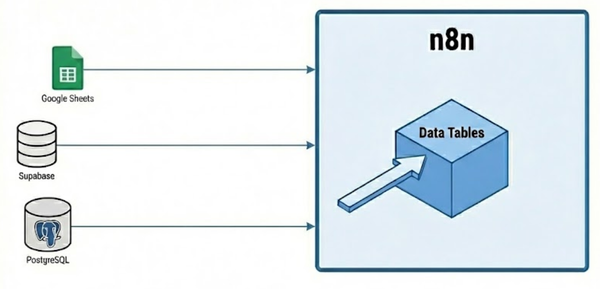

MCP performs the same decoupling for digital intelligence. It establishes an open standard that lets you build one "server" for your data (your "digital peripheral") and plug it into any MCP-compatible AI application (the "host"), whichever model sits behind it. Your Postgres database, your Slack workspace, and your GitHub repository can now speak a common language that applications built on Claude, Gemini, and GPT all understand.
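
To make the decoupling concrete, here is a rough sketch in plain Python. It does not use a real MCP SDK: `OrderDataServer`, `make_host`, and the model names are all hypothetical stand-ins. The point is only that the data side is written once and reused unchanged by different hosts.

```python
from typing import Callable, Dict


class OrderDataServer:
    """Hypothetical data-side 'peripheral': wraps one internal system once."""

    def __init__(self) -> None:
        # Stand-in for a real order database.
        self._orders = {"A100": "shipped", "A101": "processing"}

    def call_tool(self, name: str, arguments: Dict[str, str]) -> str:
        if name == "order_status":
            return self._orders.get(arguments["order_id"], "unknown order")
        raise ValueError(f"unknown tool: {name}")


def make_host(model_name: str, server: OrderDataServer) -> Callable[[str], str]:
    """Hypothetical host: any model can sit behind it; the server is untouched."""

    def ask(order_id: str) -> str:
        status = server.call_tool("order_status", {"order_id": order_id})
        return f"[{model_name}] Order {order_id} is {status}."

    return ask


server = OrderDataServer()
host_a = make_host("model-a", server)
host_b = make_host("model-b", server)
print(host_a("A100"))
print(host_b("A100"))
```

Swapping `model-a` for `model-b` changes one argument, not the server.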

Credit: Google Ultra Deep Research.

Why this matters for your strategic roadmap:

- The "Commoditization" of the Model Layer: This is the single biggest strategic shift. With MCP, your proprietary data layer is completely decoupled from the intelligence layer. You can adopt a true "Bring Your Own Model" architecture.

  - Scenario: If OpenAI raises prices next quarter, or if Anthropic releases a model that scores measurably better on your own Python coding benchmarks (say, 15%), you can swap the "brain" of your operation without rewriting the "body" (your complex data integrations). It turns the LLM into a swappable component, much like swapping a CPU in a server, giving you real leverage in vendor negotiations.

- The End of Walled Gardens: Standardization only works if the biggest players agree to play by the rules. In a rare moment of unity, the industry has rallied. MCP started as an Anthropic initiative, but it is now stewarded by the Linux Foundation under the new Agentic AI Foundation.

  - With backing from OpenAI, Google, Microsoft, and AWS, the risk of a "format war" (like VHS vs. Betamax) has essentially vanished. This is no longer a risky bet on a niche technology; it is the new architectural baseline for the cloud.

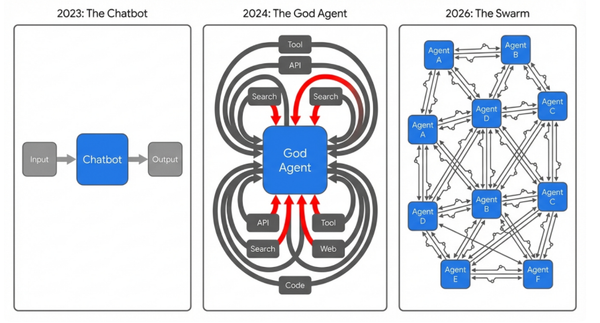

- Real Utility (The Three Primitives): MCP isn't just about piping text back and forth. It standardizes three specific capabilities that are essential for moving from "Chatbots" to true "Agentic AI":

  - Resources (Passive Context): This gives the AI "read-only" access to live environments. Instead of pasting stale text into a chat window, an agent can actively "browse" your latest server logs or read a dynamic database schema to understand how to structure a query before it writes one.

  - Tools (Active Execution): This allows the AI to do work in the real world. It defines a standard way for an agent to execute a SQL query, create a Jira ticket, or search a Slack workspace. The protocol standardizes how the call is made; sandboxing, permissions, and human approval have to come from the host application and the server you deploy. With those in place, the LLM shifts from a passive advisor to an active employee.

  - Prompts (Standardization): This addresses the problem of "Prompt Drift." You can store "Golden Prompts" (e.g., a "Code Review Standard" or "Brand Voice Guidelines") directly on the server side. This helps every agent, regardless of which model drives it or which employee uses it, start from the same compliance and style guardrails.
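
For the technically curious, the three primitives can be sketched as a toy dispatcher. MCP messages are JSON-RPC 2.0, and `resources/read`, `tools/call`, and `prompts/get` are method names from the specification. Everything else here is an assumption for illustration: the data, the `create_ticket` helper, and especially the simplified `{"text": ...}` result shape, which is not what a real MCP server returns.

```python
import json
from typing import Any, Dict

# Toy in-memory data; every name and value is made up for illustration.
RESOURCES = {"logs://app/latest": "2 errors in the last hour"}
PROMPTS = {"code_review": "Review this code against our standard:\n{code}"}


def create_ticket(title: str) -> str:
    """Hypothetical tool: pretend to open a ticket."""
    return f"Created ticket: {title}"


TOOLS = {"create_ticket": create_ticket}


def handle(request_json: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to the matching primitive."""
    req = json.loads(request_json)
    method, params = req["method"], req.get("params", {})
    if method == "resources/read":      # passive context
        result: Dict[str, Any] = {"text": RESOURCES[params["uri"]]}
    elif method == "tools/call":        # active execution
        result = {"text": TOOLS[params["name"]](**params.get("arguments", {}))}
    elif method == "prompts/get":       # shared prompt templates
        template = PROMPTS[params["name"]]
        result = {"text": template.format(**params.get("arguments", {}))}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


request = json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "create_ticket", "arguments": {"title": "Fix login"}},
})
print(handle(request))
```

Because every request has the same envelope, a host needs one client implementation to reach all three kinds of capability.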

The "But" (There's always a "But")

Standardization creates speed, but it also lowers the barrier to entry—which creates significant new risks. The "Connector Wars" are ending, but the "Governance Wars" are just beginning.

If it is suddenly easy for an AI to connect to your HR database or your production servers, you need to be extremely strict about who is connecting and what they can see. A standard, "naked" MCP connection assumes a trusted environment (like a developer running code on their local laptop). In a complex enterprise network, that is a dangerous assumption.

We are seeing the rise of "Shadow AI"—where well-meaning developers spin up local MCP servers to connect sensitive company data to unvetted cloud models, bypassing corporate security controls.

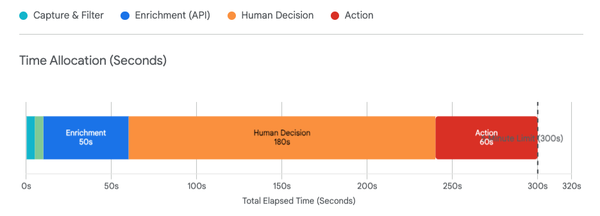

As we move from "how to connect" to "how to control," your focus needs to shift to Governance. We are witnessing the rapid emergence of "MCP Gateways"—enterprise-grade security layers (platforms like Kong, Operant AI, or MintMCP) that sit between your data and the models.

- Visibility: These gateways provide a "flight control tower" view of exactly which agents are accessing which tools.

- Policy: They enforce rate limits to prevent runaway costs and agents stuck in "infinite loops."

- Data Loss Prevention: They can actively scan and redact PII (Personally Identifiable Information) or sensitive IP before it ever leaves your network boundaries.
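
The three gateway jobs above can be sketched in a few dozen lines. This is a toy, not how Kong, Operant AI, or MintMCP actually work: `ToyGateway` is a hypothetical class, the rate limit is a simple sliding window, and the "DLP" step only catches email addresses with a deliberately naive regex.

```python
import re
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

# Naive email pattern, for illustration only; real DLP covers far more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class ToyGateway:
    """Hypothetical gateway: audit log, per-agent rate limit, email redaction."""

    def __init__(self, max_calls: int, window_seconds: float) -> None:
        self.max_calls = max_calls
        self.window = window_seconds
        self.audit_log: List[Tuple[str, str, str]] = []  # (agent, tool, outcome)
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)

    def call(self, agent: str, tool_name: str, tool: Callable[[], str]) -> str:
        now = time.monotonic()
        recent = self._recent[agent]
        # Policy: drop timestamps that have aged out of the sliding window.
        while recent and now - recent[0] > self.window:
            recent.popleft()
        if len(recent) >= self.max_calls:
            self.audit_log.append((agent, tool_name, "rate_limited"))
            raise RuntimeError(f"{agent} exceeded {self.max_calls} calls per window")
        recent.append(now)
        # Visibility: record who called what.
        self.audit_log.append((agent, tool_name, "allowed"))
        # Data loss prevention: redact before the result reaches the model.
        return EMAIL.sub("[REDACTED_EMAIL]", tool())


gateway = ToyGateway(max_calls=2, window_seconds=60)
print(gateway.call("support-bot", "lookup_customer",
                   lambda: "Contact: jane@example.com"))
print(gateway.audit_log)
```

The design choice worth noting is that all three controls live in one choke point, so no individual MCP server has to reimplement them.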

Credit: Google Ultra Deep Research

Your Action Item:

Next time you review an AI architecture proposal or talk to your CIO/CTO, ask these three strategic questions to test your organization's maturity:

- "Are we building our new integrations to be MCP-compliant, or are we hard-coding ourselves to a single vendor's proprietary API?" (Ensure you are future-proofing your assets).

- "Do we have a Gateway strategy to visualize and secure these connections?" (Ensure you aren't flying blind).

- "How are we managing Identity? Is every agent action tied to a verified human or service account?" (Ensure that an anonymous bot cannot modify your production data).
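
If your team wants a picture of what the identity question means in practice, here is a minimal sketch. The `Principal` type, the `orders:write` scope, and `update_order` are all hypothetical; a real deployment would verify identity through your existing identity provider rather than trust an object passed in by the caller.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Principal:
    """Hypothetical verified identity: a human user or a service account."""
    name: str
    kind: str  # "human" or "service"
    scopes: FrozenSet[str]


def authorize(principal: Optional[Principal], required_scope: str) -> None:
    """Raise PermissionError unless a known principal holds the scope."""
    if principal is None:
        raise PermissionError("anonymous agent actions are not allowed")
    if required_scope not in principal.scopes:
        raise PermissionError(f"{principal.name} lacks scope {required_scope!r}")


def update_order(principal: Optional[Principal], order_id: str, status: str) -> str:
    """Write action: refuses to run without an authorized principal."""
    authorize(principal, "orders:write")
    return f"{order_id} set to {status} by {principal.name} ({principal.kind})"


bot = Principal("support-bot", "service", frozenset({"orders:write"}))
print(update_order(bot, "A100", "refunded"))
```

Every successful write carries a name, which is what makes an audit trail meaningful.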

The goal here isn't just to build faster prototypes; it's to build a production-grade architecture that survives the next hype cycle and puts you in control of your intelligence stack.

Thanks for reading.

Troy