What's the big deal in n8n v2.1?

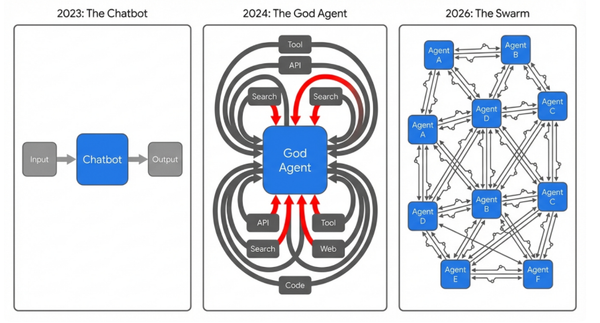

We talk a lot about "Agentic AI"—the idea that AI is moving from simply answering questions to actively performing work. But for many leaders, the gap between the idea of an autonomous workforce and the reality of deploying one has felt uncomfortably wide. Security concerns, fragility in production, and the sheer complexity of "wiring" these brains to enterprise data have stalled many pilots in the lab phase.

The release of n8n v2.1 closes that gap significantly.

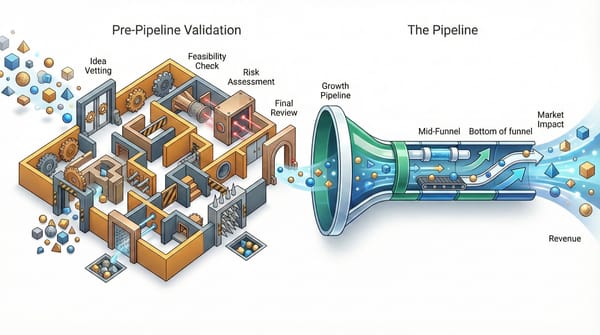

For years, automation was linear and deterministic: "If a new lead arrives in Salesforce, post a message to Slack." It was powerful, but rigid—if the data didn't match the schema, the process broke. The new n8n update represents a definitive inflection point, shifting us from that deterministic model to a probabilistic, agentic one. We are no longer just piping data; we are orchestrating reasoning.

Here is a deep dive into what you need to know about this shift, why it matters for your operations, and how to choose the right architecture for your needs.

1. Strategic Bifurcation: Personal vs. Workflow Agents

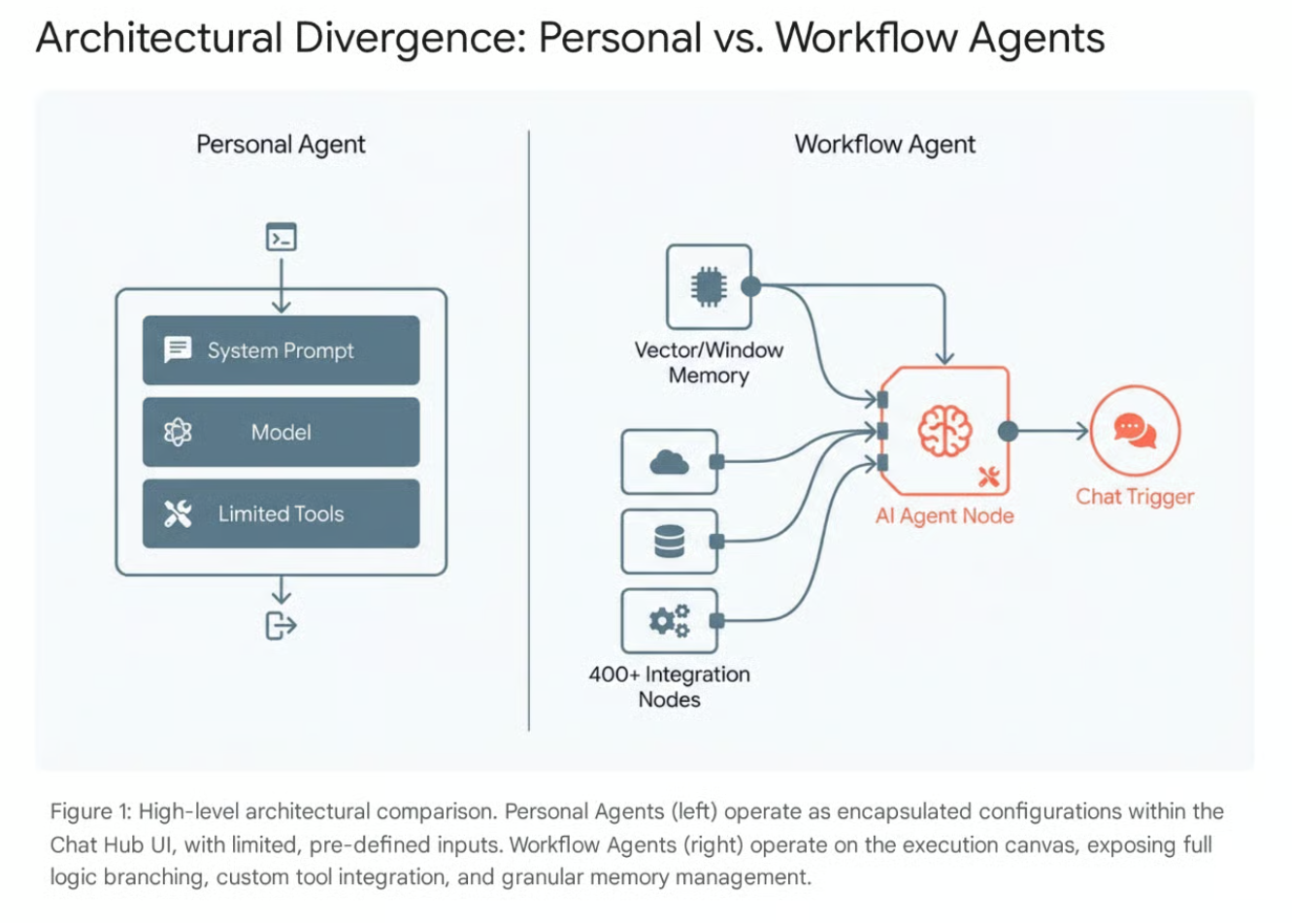

One of the smartest moves in this update is the formal bifurcation of AI into two distinct architectures. It’s no longer a "one-size-fits-all" approach; it’s about aligning the architecture with the user intent.

The Personal Agent (The "Smart Intern")

These are designed to put AI in the hands of non-technical staff quickly. Think of them as specialized, pre-configured assistants that live in a chat interface.

- Best for: Ad-hoc, low-risk tasks like "Rewrite this marketing copy," "Explain this HR policy," or "Debug this Python script." The goal here is to boost individual productivity.

- The Limitation: They are "walled gardens" by design. They operate on a simple prompt-response loop and lack the ability to execute complex, multi-branch logic or interact deeply with external infrastructure. They are ephemeral—once the session ends, the context evaporates. They are safe, but they cannot "do" deep work.

The Workflow Agent (The "Industrial Engine")

This is where the real enterprise value lies. These agents live on the backend canvas and handle complex, multi-step scenarios that require persistence and integration.

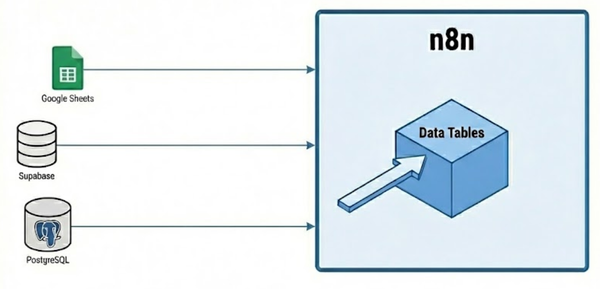

- Capabilities: They utilize the "Open Frontier" of the canvas, meaning they can use any of n8n’s 400+ integration nodes as tools. A Workflow Agent can read a ticket, query a SQL database for customer history, draft a response, wait for human approval via Slack, and then update Jira—all in one flow.

- Memory & State: Unlike Personal Agents, Workflow Agents can be engineered to possess "Long-Term Semantic Memory." By connecting to Vector Stores (like Pinecone) or databases, they can recall user preferences or project details from weeks ago, enabling "Session Persistence" that survives across different devices and interactions.
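
To make the memory idea concrete, here is a minimal Python sketch of semantic recall keyed by user. It is an illustration only: the `embed` function is a toy bag-of-words stand-in for a real embedding model, `MemoryStore` is a hypothetical in-memory class standing in for a vector store like Pinecone, and none of these names come from n8n.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical in-memory stand-in for a vector store."""

    def __init__(self) -> None:
        self._items: list[tuple[str, str, Counter]] = []

    def remember(self, user_id: str, text: str) -> None:
        # Store the raw text next to its vector, scoped to one user.
        self._items.append((user_id, text, embed(text)))

    def recall(self, user_id: str, query: str, top_k: int = 1) -> list[str]:
        # Rank this user's memories by similarity to the query.
        qv = embed(query)
        scored = [
            (cosine(qv, vec), text)
            for uid, text, vec in self._items
            if uid == user_id
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for score, text in scored[:top_k] if score > 0]


store = MemoryStore()
store.remember("u1", "prefers weekly status reports on monday")
store.remember("u1", "project atlas deadline is end of march")
store.remember("u2", "prefers daily status reports")

print(store.recall("u1", "when is the atlas deadline"))
```

Because memories are keyed by user rather than by chat session, a later conversation on a different device can retrieve the same facts. A production setup would swap the toy vectors for real embeddings and a managed vector store.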

2. Operational Maturity: Why IT Will Finally Say "Yes"

Historically, "low-code" tools made CIOs nervous. They were often seen as fragile—if one workflow broke or a script hung, it could destabilize the whole instance, dropping webhooks and halting critical business processes.

n8n v2.1 addresses this "Day 2" operational risk by introducing Task Runner Isolation.

In plain English: previous versions ran code in a monolithic process. Now, v2.1 decouples execution. If an agent gets stuck in an infinite reasoning loop or tries to process a massive dataset, it crashes in its own isolated sandbox without taking down your entire automation server. This stability is a prerequisite for running autonomous agents that execute arbitrary code.
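
The general principle is easy to show in a few lines of Python. The sketch below is not how n8n implements its task runners; it only illustrates the idea of running untrusted code in a separate process with a time limit, so that a runaway task fails on its own. The `run_isolated` helper and its return shape are hypothetical, and a separate process alone is not a full security sandbox.

```python
import subprocess
import sys


def run_isolated(code: str, timeout_s: float = 2.0) -> dict:
    """Run a code snippet in a separate Python process with a time limit.

    The parent process survives even if the child hangs or crashes.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,  # the child is killed when this expires
        )
    except subprocess.TimeoutExpired:
        return {"status": "timeout", "output": ""}
    if proc.returncode != 0:
        return {"status": "error", "output": proc.stderr.strip()}
    return {"status": "ok", "output": proc.stdout.strip()}


if __name__ == "__main__":
    print(run_isolated("print(6 * 7)"))
    print(run_isolated("while True: pass", timeout_s=0.5))
```

The second call simulates a task stuck in an infinite loop: the child process is terminated after the timeout, and the caller gets a `timeout` status back instead of hanging with it.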

The release also introduces critical governance features:

- Draft vs. Production Modes: You can now iterate on an agent’s system prompts or temperature settings in a safe "Draft" mode without risking the stability of the live version your team relies on. This lets you work on a fix while the live version keeps running.

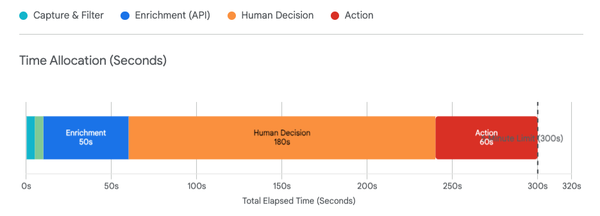

- The "Time Saved" Metric: This matters for ROI and budget justification. You can now configure workflows to record an estimate of how many minutes of manual labor each execution saves. This moves the conversation from "AI is cool" to a quantifiable P&L impact: "This Customer Support Agent saved us 83 engineering hours this month, for net savings of $4,200 after token costs."
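
To show the arithmetic behind a figure like that, here is a minimal Python sketch. The execution count, minutes saved per run, hourly rate, and token cost are hypothetical inputs chosen to reproduce the 83 hours and $4,200 in the example; they are not n8n defaults, and `net_savings` is not an n8n function.

```python
def net_savings(
    executions: int,
    minutes_saved_per_run: float,
    hourly_rate: float,
    token_cost: float,
) -> dict:
    """Turn a per-execution time-saved estimate into monthly savings."""
    hours_saved = executions * minutes_saved_per_run / 60
    gross = hours_saved * hourly_rate
    net = gross - token_cost
    # ROI as a ratio of net savings to spend; undefined when spend is zero.
    roi = net / token_cost if token_cost else None
    return {"hours_saved": hours_saved, "gross": gross, "net": net, "roi": roi}


# Hypothetical month: 996 runs, 5 minutes saved each, $60/hour, $780 in tokens.
report = net_savings(
    executions=996,
    minutes_saved_per_run=5,
    hourly_rate=60.0,
    token_cost=780.0,
)
print(report)
```

Note the distinction the sketch makes explicit: the dollar figure is net savings, while ROI is the ratio of that figure to what was spent.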

3. The "Build vs. Code" Debate

A common question I get is: "Should we use n8n, or should we have our engineers build agents in Python using LangChain or LangGraph?"

The answer is no longer binary—it’s about the complexity profile of the task.

- n8n dominates when integration is the bottleneck. If your agent needs to talk to HubSpot, Slack, Google Drive, and a legacy ERP, n8n is vastly faster to deploy. The "piping" is pre-built, allowing you to focus on the agent's behavior rather than API authentication.

- Code-first (LangGraph/CrewAI) wins when reasoning is the bottleneck. If you are building a highly complex legal analysis bot that needs to "think" in recursive loops, self-correct code 50 times, or manage a complex state graph, a code-based approach still offers the fine-grained control needed for "Deep Tech" applications.

The Winning Strategy: The Hybrid Stack

The most sophisticated enterprises are beginning to use both in a "Headless Brain, Connected Body" architecture.

They use Python/LangGraph for the agent's "Brain" (handling the deep cognitive reasoning and state management), and they use n8n for the "Body" (acting as the API gateway, handling triggers, connecting to SaaS tools, and moving data to and from the brain). This leverages the strengths of both: the reasoning power of code and the connectivity speed of low-code.
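
As a rough picture of that split, the Python sketch below exposes a "brain" as a small HTTP service that an n8n workflow could call (for example, from an HTTP Request node) and then act on the JSON it returns. Everything here is an assumption for illustration: the `decide` function is a placeholder for real LangGraph logic, and the payload fields, port, and routing rules are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def decide(ticket: dict) -> dict:
    """Placeholder 'brain': a real system would run its agent graph here."""
    text = str(ticket.get("text", "")).lower()
    if "refund" in text:
        return {"action": "escalate", "channel": "billing"}
    return {"action": "auto_reply", "channel": "support"}


class BrainHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Read the JSON body sent by the calling workflow.
        length = int(self.headers.get("Content-Length", 0))
        try:
            ticket = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self.send_error(400, "invalid JSON")
            return
        if not isinstance(ticket, dict):
            self.send_error(400, "expected a JSON object")
            return
        body = json.dumps(decide(ticket)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the sketch quiet


def make_server(port: int = 8000) -> HTTPServer:
    """Build the server; call .serve_forever() on the result to run it."""
    return HTTPServer(("127.0.0.1", port), BrainHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

In this arrangement the workflow side owns triggers, credentials, and SaaS connections, while the service owns the decision logic, so either half can change without rewriting the other.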

The Bottom Line

This update signals that self-hosted, private AI agents are ready for production workloads. We are moving from simple "If This Then That" logic to a world where we can deploy "Supervisor" agents that delegate tasks to "Worker" agents reliably.

If you have been waiting to operationalize AI because the tools felt too experimental or lacked the governance controls IT demanded, that excuse just expired. The infrastructure is now ready for the workforce.

Let’s get building.

(Founder Name)

Productive AI