Why Google Workspace users should go all in on Gemini

The era of "Destination AI" is ending. Here is the strategic case for consolidation.

For the last two years, the playbook for enterprise AI has been relatively straightforward: "Bring Your Own AI."

In the absence of mature enterprise tools, we improvised. We layered OpenAI’s ChatGPT Team or Enterprise subscriptions on top of our existing productivity stacks. We treated AI as a brilliant, but external, consultant—a "brain in a jar" that lived in a separate browser tab.

The workflow became a distinct, friction-filled ritual: stop what you are doing, open a new window, log in (often battling 2FA), navigate to a specific Custom GPT, manually export or copy your context, paste it in, and wait for an answer. Then, copy that answer and paste it back into your work.

It worked—it gave us immediate access to frontier reasoning capabilities—but it inadvertently created a fractured operational environment. We built data silos, normalized "shadow IT" behaviors where data leaked into personal accounts, and accepted significant operational friction as the necessary cost of innovation.

I’ve spent the last month rigorously analyzing the architectural divergence between OpenAI and Google, specifically regarding the recent maturity of Gemini 3 for Workspace. I’ve reached a conclusion that I am now advising my clients on: If your business runs on Google Workspace, we have reached a strategic inflection point.

It is likely time to consolidate your AI investment solely within Google’s infrastructure.

This isn’t a battle of chatbots (GPT-5.2 vs. Gemini 3). If it were, it would be a draw; the models are peers in reasoning and coding. This is a battle of architectures: Operating Systems versus Applications.

Here is the executive summary of why the "All-In" Gemini strategy is winning on TCO (Total Cost of Ownership) and operational efficiency.

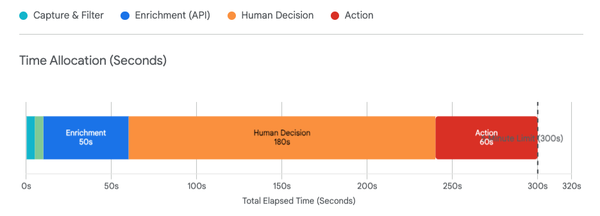

1. The End of "Destination AI" and the "Toggle Tax"

ChatGPT, even with its desktop applications, remains a "destination." It is a place you go to visit intelligence.

To use it, your team pays a continuous cognitive tax known as context switching. Research on workplace interruptions suggests that, once pulled away from a task, it can take an average of around 23 minutes to fully regain deep focus. Every time an employee needs AI assistance in the ChatGPT workflow, they must disengage from the document they are drafting or the email they are reading. They break their flow state to toggle windows, re-establish context, and "brief" the AI on what they are working on.

Gemini is embedded. It lives in the side panel of Docs, Drive, Slides, and Gmail. It doesn't need to be briefed; it "sees" the document open in front of you.

The Workflow Difference:

- The Old Way (ChatGPT): To analyze a Q3 Financial Report, you must locate the file in Drive, sync it via a connector (and hope the index is fresh), or download/upload it, then prompt the AI.

- The New Way (Gemini): You simply highlight a table in your open Google Doc and ask the side panel, "Suggest three talking points for the board based on this variance." The AI shares your view instantly.

We often underestimate the friction of that "toggle tax," but at an organizational scale, it is massive. Moving to an embedded model transforms AI from a tool you have to pick up into an ambient layer of the environment you inhabit.

2. The "Live Context" Advantage vs. The Indexing Gap

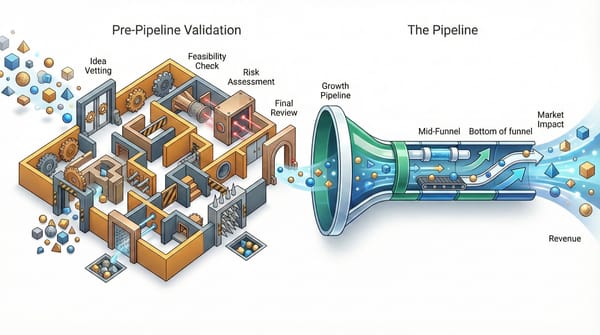

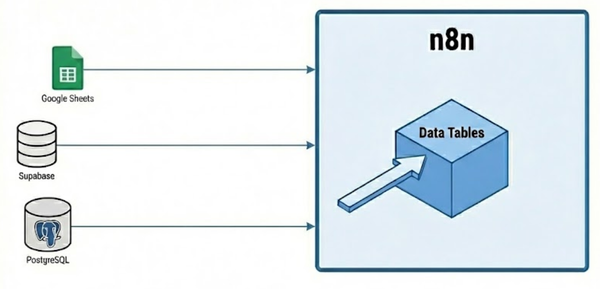

This is the strongest technical argument for consolidation. OpenAI has launched "Synced Connectors" for Google Drive, but they still face a fundamental architectural limitation: Indexing.

- OpenAI relies on indexed snapshots. When you connect ChatGPT to Google Drive, it must crawl and index your files to build a knowledge base. This creates an "Indexing Gap." For large organizations, full indexing can take hours or even days. If a policy changes in a document at 9:00 AM, the AI might still be referencing the old version at 10:00 AM. It is effectively reading yesterday's news.

- Gemini operates on live data. When you point a Google Gem (Google's version of a custom agent) at a Drive folder, it references the live files via internal Workspace APIs. It doesn't ingest a copy; it reads the source.

The Implication: There is near-zero latency between a document update and the AI’s understanding of it.

- Example: Imagine a legal team negotiating a contract. Clauses are being edited in real time in a Google Doc. If you ask Gemini, "What is our current liability cap?" it reads the clause as it exists right now. An external AI, relying on an index from earlier that morning, might confidently answer from a version that no longer exists.

For regulated industries, law, or fast-moving agencies, the risk of an AI acting on a "cached" version of the truth is a liability they can no longer accept.
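To make the Indexing Gap concrete, here is a minimal Python sketch of the two read paths. It is a toy model, not either vendor's implementation: `Document`, `SnapshotIndex`, and `LiveReader` are hypothetical names I made up for illustration, and the contract text is invented sample data.

```python
from dataclasses import dataclass


@dataclass
class Document:
    """Stand-in for a live file (hypothetical, not a real Drive object)."""
    text: str
    version: int = 1

    def edit(self, new_text: str) -> None:
        self.text = new_text
        self.version += 1


@dataclass
class SnapshotIndex:
    """Models an indexed copy: answers come from the last crawl."""
    source: Document
    cached_text: str = ""
    cached_version: int = 0

    def crawl(self) -> None:
        # Copy the document as it exists at crawl time.
        self.cached_text = self.source.text
        self.cached_version = self.source.version

    def read(self) -> str:
        return self.cached_text

    def is_stale(self) -> bool:
        return self.cached_version != self.source.version


@dataclass
class LiveReader:
    """Models a live read: answers come from the source at query time."""
    source: Document

    def read(self) -> str:
        return self.source.text


contract = Document("Liability cap: $1M")
index = SnapshotIndex(contract)
index.crawl()                        # the morning crawl
live = LiveReader(contract)

contract.edit("Liability cap: $2M")  # clause renegotiated after the crawl

print(index.read())      # Liability cap: $1M
print(live.read())       # Liability cap: $2M
print(index.is_stale())  # True
```

The snapshot is not wrong when it is taken; it simply stops being true the moment the source changes, and nothing in the read path tells the user that.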

3. Security, Sovereignty, and The Trust Boundary

When you use an external AI provider, you are fundamentally sending data out. Even with robust enterprise agreements, zero-retention policies, and encrypted tunnels, you are pushing proprietary data across a perimeter to a third-party processor. This complicates compliance, requiring new Business Associate Agreements (BAAs), vendor risk assessments, and constant vigilance against data leakage.

Google’s architecture relies on a Trust Boundary. Because your emails, docs, and sheets already live in Google’s data centers, processing them with Gemini requires no data egress. The model comes to the data; the data does not go to the model.

- The "ACL Nightmare" Avoided: One of the hardest things to manage in external AI is Access Control Lists (ACLs). If you sync your company's Drive to an external vector database, you risk losing granular permissions (e.g., "Only HR can see salary data").

- Inherited Governance: Gemini automatically respects the permissions you’ve already set in Workspace. If a junior analyst doesn't have access to the "Executive Strategy" folder in Drive, they cannot ask Gemini to summarize it, even if the Gemini agent has access. The security model is user-centric, not agent-centric.

- Unified Compliance: Gemini operates within the existing compliance controls of your Workspace. If you have Data Loss Prevention (DLP) rules that block credit card numbers in Gmail, Gemini respects them. Crucially, Gemini for Workspace operates within the FedRAMP High authorized boundary of the platform.
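The difference between an agent-centric and a user-centric check can be sketched in a few lines of Python. This is an illustration of the principle only, not how Workspace enforces permissions internally: the function names, the ACL dictionaries, and the folder and user names are all hypothetical.

```python
def agent_centric_allows(agent_acl: set, folder: str) -> bool:
    """Agent-centric: anything the agent can read, any user can ask about."""
    return folder in agent_acl


def user_centric_allows(user: str, user_acl: dict, agent_acl: set, folder: str) -> bool:
    """User-centric: the requesting user must also hold access to the folder."""
    return folder in agent_acl and folder in user_acl.get(user, set())


# Hypothetical permissions, mirroring the example in the text.
user_acl = {
    "junior_analyst": {"Team Reports"},
    "cfo": {"Team Reports", "Executive Strategy"},
}
agent_acl = {"Team Reports", "Executive Strategy"}

print(agent_centric_allows(agent_acl, "Executive Strategy"))                               # True
print(user_centric_allows("junior_analyst", user_acl, agent_acl, "Executive Strategy"))   # False
print(user_centric_allows("cfo", user_acl, agent_acl, "Executive Strategy"))              # True
```

The first line is the "ACL Nightmare": once the check only looks at what the agent can see, the junior analyst's question gets answered. The user-centric check closes that gap by intersecting the two sets of permissions.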

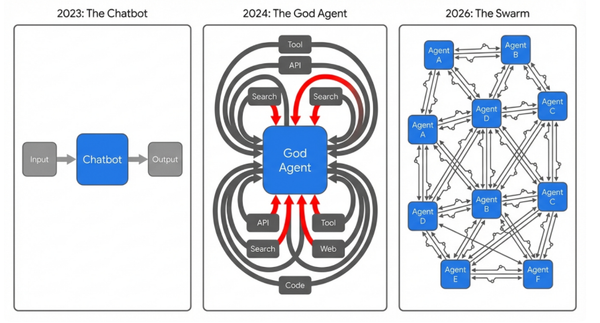

4. From Chatbots to "Digital Workers" (The Agentic Shift)

Finally, the maturity of Google Workspace Studio (formerly Flows) changes the game from "chatting" to "automating."

ChatGPT is largely reactive; it waits for you to prompt it. It requires a human driver to initiate every interaction.

Google Workspace Studio, by contrast, is event-driven. Because the AI sits inside the Operating System, we are moving toward agents that "wake up" based on system triggers. We can now script "Digital Workers" that run in the background:

- Scenario: The Account Management Bot

- Trigger: A new email arrives from a key domain (e.g., @client-name.com) containing the word "Urgent."

- Action: The agent analyzes the email sentiment, looks up the client's latest status in a Google Sheet, drafts a prioritized response for the Account Manager, and pings the team in Google Chat.

- Scenario: The Onboarding Agent

- Trigger: A new file is added to the "New Hires" folder.

- Action: The agent reads the resume, generates a personalized "First Week Roadmap" Doc, and schedules introductory meetings with the relevant department heads on the calendar.

This shifts us from Human-in-the-loop (you helping the AI write) to Human-on-the-loop (you supervising the work done by agents). Building this level of automation with OpenAI currently requires a complex "glue" of third-party integration tools (like Zapier or Make), which introduces yet another vendor, another bill, and another security surface area. With Google, the event listeners are native.
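The trigger/action pattern behind the Account Management Bot can be sketched in plain Python. This is not Workspace Studio's API (which is configured in the product, not written like this); `Email`, `Rule`, `dispatch`, and the helper functions are hypothetical names, and the action is a placeholder for the real steps.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Email:
    sender: str
    subject: str
    body: str


@dataclass
class Rule:
    name: str
    trigger: Callable[[Email], bool]   # decides whether the agent wakes up
    action: Callable[[Email], str]     # the work done when it does


def urgent_from_key_domain(email: Email, domain: str = "client-name.com") -> bool:
    """Trigger: sender is on the key domain and the message mentions 'urgent'."""
    from_key_domain = email.sender.lower().endswith("@" + domain)
    mentions_urgent = "urgent" in (email.subject + " " + email.body).lower()
    return from_key_domain and mentions_urgent


def draft_for_account_manager(email: Email) -> str:
    """Action placeholder: stands in for sentiment analysis, Sheet lookup, draft, Chat ping."""
    return f"Draft reply queued for: {email.subject}"


def dispatch(email: Email, rules: list[Rule]) -> list[str]:
    """Run every rule whose trigger matches the incoming event."""
    return [rule.action(email) for rule in rules if rule.trigger(email)]


rules = [Rule("account-management-bot", urgent_from_key_domain, draft_for_account_manager)]

hit = Email("ana@client-name.com", "Urgent: renewal terms", "Please call today.")
miss = Email("news@example.org", "Weekly digest", "Nothing pressing.")

print(dispatch(hit, rules))   # ['Draft reply queued for: Urgent: renewal terms']
print(dispatch(miss, rules))  # []
```

Note that no human prompt appears anywhere in this flow: the event itself starts the work, and the person only reviews the draft that comes out the other end.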

The Bottom Line: Reducing the "Integration Tax"

There may still be edge cases where OpenAI makes sense. But for the core operations of a business (Sales, HR, Legal, Operations, and Creative), the friction of managing two separate vendors is, more often than not, becoming impossible to justify.

Consolidating on Gemini eliminates the "integration tax," simplifies identity management (one login, not two), and perhaps most importantly, gives your AI direct access to the single source of truth: your live files.

Take a look at your current stack. If you are paying for ChatGPT Enterprise while your data sits in Google Drive, you are paying a premium for friction.

Thanks for reading.

Troy